You Look Like a Thing and I Love You, book review: The weird side of AI

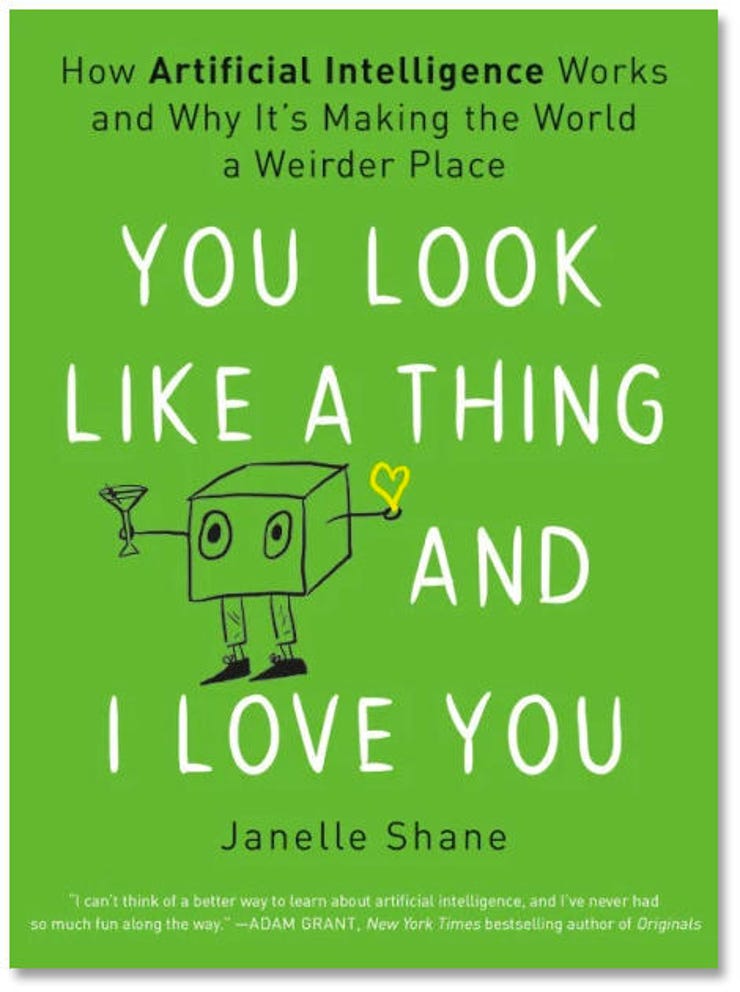

You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It's Making the World a Weirder Place • By Janelle Shane • Little, Brown • 260 pages • ISBN: 978-0-316-52524-4 • $24.98

There's an often-told, possibly apocryphal story about a military project to develop an AI that could reliably detect tanks in photos. Take a dataset of 200 photos, train the AI on half of them and test it on the rest. Result: 100% accuracy. But in a military retest, the AI scores no better than chance: instead of learning to detect tanks, it has learned to tell cloudy days from sunny ones in the photos' backgrounds.


The story appeals for two reasons. First, it lets us mock the flawed technology. Second, it encapsulates an underlying truth about the kinds of mistakes AIs make. In You Look Like a Thing and I Love You, research scientist Janelle Shane calls this type of AI error a 'shortcut' and gives many more examples. One of her AIs, for instance, figures out that the easiest way to keep humans from entering one of two hallways is simply to kill them all...

I first noticed Shane on Twitter, after she sent out a request for pictures of sheep in strange places: "I want to prank an AI," she said. The results highlighted the hilarious problems that emerge when you probe the limits of artificial comprehension. Paint the sheep orange and put them in a meadow, and the AI thinks they're flowers. Put them in a tree, and it tags them as 'birds'. Because we can't be sure how AIs arrive at these determinations, we shouldn't blindly trust them when they're turned loose on problems without definitive right answers, such as recruitment or decisions in the criminal justice system. Plus, current AIs over-report giraffes. It's a thing.

A question of trust

For much of the book, Shane explains how AIs function, how they learn, and how they can be fooled, breaking up her text with intermediate results and many small cartoons. Finally, she settles into the most important question: how do we know when to trust an AI? Because the narrower the problem you give an AI, the smarter it seems, the first thing to consider is the breadth and difficulty of the problem it's being asked to solve. The corollary: is the AI actually solving the problem you think it is? Reading Shane, you conclude, like Christian Wolmar and Meredith Broussard, that self-driving cars are unlikely to fill our roads any time soon: it's just too hard, too broad, and too variable a problem, as is detecting online hate speech. In a kind of reverse Turing test, Shane notes that on social media an AI chatbot is better at detecting other AI chatbots than at detecting humans.

Enter humans. As Shane points out, and as two other recent books (Ghost Work by Mary Gray and Siddharth Suri, and Behind the Screen by Sarah T. Roberts) make clear, much of today's 'AI' relies heavily on humans. Sometimes, as with a cheating psychic, the hidden human help is justified with 'we can't get it to work right now; this is just a stopgap'. At other times, it's a legitimate way to combine the best of human intelligence with AI's speed and scale.

Claiming you use AI, even pretend AI, is good marketing, whether to politicians, venture capitalists or the public. So your next question should be: 'is this really an AI?' If the AI displays memory, there's a human in there somewhere. Humans are also needed to correct bias in training datasets and to counteract the unwanted effects of interaction with the environment (such as the unconstrained pricing algorithms that led two Amazon sellers to list an unremarkable used book for millions of dollars).

In the end, Shane says, expect AI to become your partner, not your replacement.
