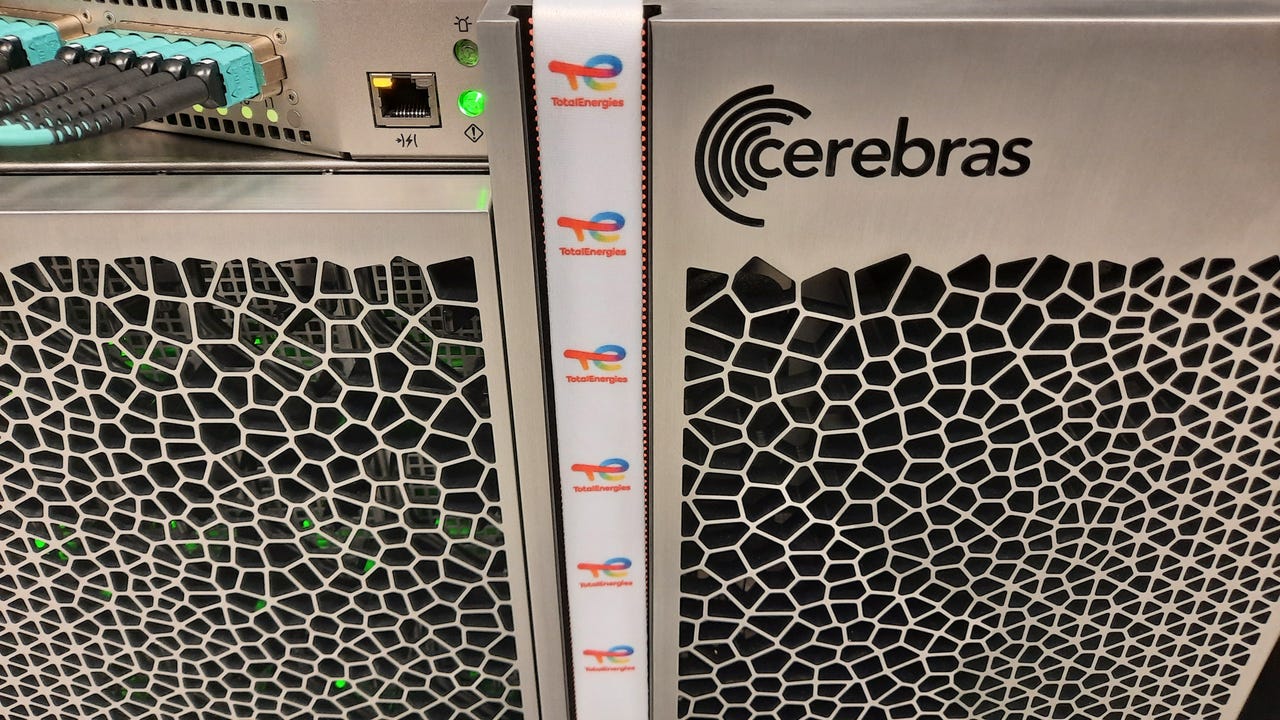

AI computer maker Cerebras nabs TotalEnergies SE as first energy sector customer

Cerebras Systems, the San Jose, California-based startup that makes computers dedicated to processing deep learning algorithms and other large-scale scientific computing tasks, Wednesday morning announced it has sold its first "CS-2" computer to TotalEnergies, the 98-year-old, Paris-based energy exploration and production company.


It is the first win for the young AI startup in the energy sector.

"The energy sector has historically been a monster consumer of compute, and they've generally been quiet about it," said Cerebras co-founder and CEO Andrew Feldman in an interview with ZDNet. "The energy market is four times the size of the market that Google and all the rest play in -- it's unbelievably big," he said, referring to the online advertising and shopping market.

"Our engineers are quite excited," said Dr. Vincent Saubestre, who is CEO of TotalEnergies Research and Technologies, "because the Cerebras machines are designed for machine learning, and informing the models to design new molecules, to design new materials, to simulate things that would take much longer" using other computers. Based in Houston, Texas, the research facility is where the CS-2 system has been in testing. Saubestre was speaking in the same interview with ZDNet as Feldman.

He added, "We are in very different fields: we bring subject-matter experts, they bring hardware that is untested in this industry."

The TotalEnergies research office is tasked with evaluating new machines to augment, and ultimately take over from, TotalEnergies's existing systems.

"We are the test pilots of new machines in Houston, the Top Gun school," as Saubestre characterizes the office.

Also: AI is changing the entire nature of compute

TotalEnergies is a devotee of really big computers, and the use of the Cerebras CS-2 comes amid plenty of testing, and building, of supercomputing systems. TotalEnergies's Pangea III, which is based on IBM machines, is the 29th-largest supercomputer in the world. It is the largest privately owned supercomputer, said Saubestre.

Cerebras, in August of 2019, unveiled the world's largest chip, the "Wafer-scale engine," or WSE, dedicated to algorithmic processing heavy on things such as matrix multiplication.

The company secured early business from government labs in 2019, including the U.S. Department of Energy's Argonne National Laboratory and Lawrence Livermore National Laboratory.

The first project tested on the CS-2 is a "seismic modeling" task that is used to detect where one should drill for oil and gas. That work is being detailed Wednesday in a presentation by TotalEnergies researchers at the 2022 Energy HPC Conference at Rice University in Houston.

The work involves large seismic images, in which acoustic waves are bounced off underwater surfaces to gauge the landscape, "what is oil, what is not oil, what is gas, what is not gas." Large simulations then take the place of the porous media in which oil and gas are stored.

"It's essentially inverting large matrices and simulating a physical phenomenon," said Saubestre. Additional details are related in a blog post by Cerebras.
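To give a feel for the "simulating a physical phenomenon" half of that description, here is a minimal sketch of an acoustic wave simulation. It assumes a one-dimensional finite-difference (leapfrog) scheme with fixed boundaries; the article does not say which numerical method TotalEnergies uses, and the names `step_wave` and `simulate` are hypothetical, chosen only for illustration. It does not cover the matrix-inversion half of the workload.

```python
def step_wave(u_prev, u_curr, courant):
    """Advance a 1D acoustic wavefield by one time step (leapfrog scheme).

    `courant` is the dimensionless ratio c * dt / dx; values <= 1 keep
    this explicit scheme stable.
    """
    n = len(u_curr)
    c2 = courant * courant
    u_next = [0.0] * n  # endpoints stay at 0.0: fixed boundaries
    for i in range(1, n - 1):
        # Second spatial difference: reads three neighbouring values.
        laplacian = u_curr[i - 1] - 2.0 * u_curr[i] + u_curr[i + 1]
        u_next[i] = 2.0 * u_curr[i] - u_prev[i] + c2 * laplacian
    return u_next


def simulate(n_cells, n_steps, courant=0.5):
    """Propagate a unit impulse placed at the middle of the grid."""
    u_prev = [0.0] * n_cells
    u_curr = [0.0] * n_cells
    u_curr[n_cells // 2] = 1.0  # impulsive source at the centre cell
    for _ in range(n_steps):
        u_prev, u_curr = u_curr, step_wave(u_prev, u_curr, courant)
    return u_curr


if __name__ == "__main__":
    print(simulate(n_cells=21, n_steps=8))
```

Each grid update performs only a handful of arithmetic operations for every value it reads, which is why stencil codes of this kind tend to be limited by memory bandwidth rather than raw compute. Production seismic codes work in three dimensions with far larger grids and higher-order stencils.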

Also: Drug giant GlaxoSmithKline became one of Cerebras's first commercial customers in 2020

Feldman said that running against a single Nvidia A100 GPU, the Cerebras chip, which has 56 times the silicon area of the GPU, and 12,733 times the memory bandwidth, was able to beat the performance of the Nvidia part by 100 times.

"We demonstrated a 100x speedup because of memory bandwidth, and that these workloads are a combination of AI, and other HPC tasks, are extremely computationally intensive and require a great deal of access to and from memory."

"That is no joke in the world of compute; you don't get a whole lot better [than] 100x in our world."

Although the comparison of a wafer-sized Cerebras part to a single Nvidia GPU is not quite apples to apples, Feldman said the comparison is still a meaningful demonstration of a speedup because there are benefits to a system of integrated cores and integrated bandwidth that exceed what many discrete parts can do when bundled together.

"When we are 100 times faster than one GPU, that doesn't mean you can do what we can do with 100 GPUs; it's probably closer to 1,000," said Feldman. "Because they don't scale linearly, and it takes more than twice the power" to double the number of discrete GPUs.
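Feldman's arithmetic can be illustrated with a toy scaling model. The sketch below assumes that the speedup from `n` GPUs follows a power law, `n ** alpha`, where `alpha = 1` means perfect linear scaling. Both the power law and the value `alpha = 2/3` are assumptions picked so that the result lands on his rough figure of 1,000; they are not measurements, and the function names are hypothetical.

```python
def speedup(n_gpus: int, alpha: float) -> float:
    """Speedup over one GPU under an assumed power-law scaling model.

    alpha = 1.0 is ideal linear scaling; alpha < 1.0 is sublinear.
    """
    return n_gpus ** alpha


def gpus_needed(target_speedup: float, alpha: float) -> float:
    """Invert the model: how many GPUs reach the target speedup."""
    return target_speedup ** (1.0 / alpha)


if __name__ == "__main__":
    for alpha in (1.0, 0.8, 2.0 / 3.0):
        n = gpus_needed(100.0, alpha)
        print(f"alpha={alpha:.2f}: about {n:,.0f} GPUs for a 100x speedup")
```

Under linear scaling, 100 GPUs would match a 100x speedup. With the assumed exponent of two-thirds, the same target takes roughly 1,000 GPUs, which is the order of magnitude Feldman cites.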

Also: Glaxo's biology research with novel Cerebras machine shows hardware may change how AI is done

Feldman points to a study last year by Tim Rogers and Mahmoud Khairy of Purdue University published by the Association for Computing Machinery. That study offers a deep dive into the details of different chip architectures for AI.

"I would be a bit careful in adverting 100 times, but it has that potential, definitely," said Saubestre.

Saubestre emphasized the benefit of the Cerebras machine as the group takes on larger and larger tasks.

TotalEnergies is researching methods of carbon capture to try to reduce greenhouse gases in support of COP26, the 2021 United Nations Climate Change Conference.

"The difference there is you're not looking at confinement on a ten-mile by ten-mile area, you're looking at basin-sized confinement where you're going to be storing the CO2, and you're looking at time simulations that do not last ten, twenty, thirty years but at storing CO2 for hundreds of years."

"When we start to look at these massive basin-scale type models, it's also an advantage for the Cerebras machines."

TotalEnergies is also researching materials, such as for high-performance batteries used in space, and designs for materials that would directly capture CO2 from the air and trap it.

"These are all the exciting projects we will be testing in the coming year with Andrew's team."

Also: Cerebras's first customer for giant AI chip is supercomputing hog U.S. Department of Energy

The work is noteworthy not just for the scale of the problems but also for the use of what Cerebras calls its Cerebras Software Language, an SDK introduced last year that allows for more low-level control of the machine. The CSL allows users to "create custom kernels for their standalone applications or modify the kernel libraries provided for their unique use cases," as Cerebras explains. The TotalEnergies researchers worked with Cerebras to refine the CSL code to optimize workloads on the CS-2.

As for future purchases beyond the one CS-2 unit, Saubestre described TotalEnergies and Cerebras as fiancés in the middle of a courtship.

"There are a lot of partnerships where you're carpooling to go to the nightclub, and then there are long-term collaborations," said Saubestre. "We are fiancés for the time being, but we are trying to make this couple work."

"There's a lot that can be optimized, and there's a lot of potential, and that was the reason to go into this partnership with someone who is as keen as we are to make it happen," said Saubestre. "We're hoping to retain this competitive advantage that we've had over the years and inventing the future machines to run our simulations."

Saubestre added that some vendors with whom TotalEnergies works focus on selling cloud services, but such services aren't necessarily ideal for the kinds of work the energy giant needs to get done.

"They're selling the cloud as if it was the end of it all, and right now, it's not as attractive as it seems," he said.