Cerebras’s first customer for giant AI chip is supercomputing hog U.S. Department of Energy

Cerebras Systems, the Los Altos, California, startup that came out of stealth mode last month by revealing the world's largest computer chip, on Tuesday morning announced its first customer: the U.S. Department of Energy.

The DoE's use of the Cerebras computers is taking place first at Argonne National Laboratory and at Lawrence Livermore National Laboratory, but is expected to spread to other DoE facilities, according to Cerebras.

Supercomputing, sometimes referred to as "high-performance computing," has been a lucrative arena in which chip makers prove themselves by offering up their top-of-the-line parts for massively parallel systems. The DoE has been an especially active customer of late.

In March, Intel, in partnership with computer maker Cray, announced a $500 million deal with the DoE to deliver to the Department the first "exaFlop" computer by 2021, code-named "Aurora." And in May, Advanced Micro Devices announced a $600 million system contract with Cray for the Department, nicknamed "Frontier," also to arrive in 2021. (Cray, which is being acquired by Hewlett Packard Enterprise, has announced a third supercomputing deal with the Department.)


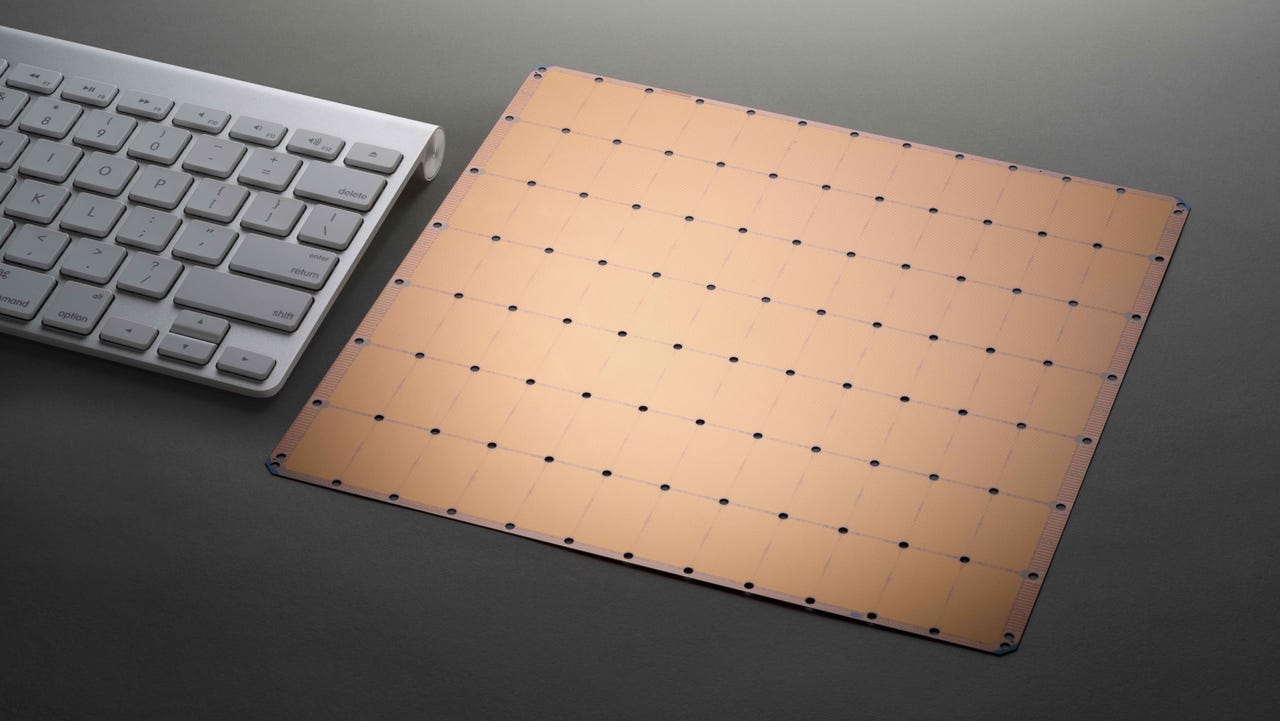

The Cerebras "wafer-scale engine," measuring 8.5 inches on a side, is the world's largest chip and is dedicated to the vector-matrix math prevalent in machine-learning forms of artificial intelligence.

Unlike Intel and AMD, whose chips go into systems built by Cray, Cerebras will be selling its own complete system: a computer built around the "wafer-scale engine," the 46,225-square-millimeter chip cut from a single 12-inch wafer.

The announcement gave no details of the implementation, but the implication is that Cerebras has a head start on the other systems: those will not be in place for another two to three years, whereas Cerebras has said it is starting to ship its computers this month.

In an interview with ZDNet, Cerebras co-founder and chief executive Andrew Feldman declined to say how much the contract was worth, saying only that "it's good business for us." He said Cerebras will announce further details in the months to come.

Note that SC19, a major supercomputing conference, takes place in Denver from November 17 through 22, and it's conceivable Cerebras could discuss details, and more contract wins, at that show.

Asked about the applications for which the system will be used, Feldman spoke in general terms.


"When you set out on a journey like we did, you want to gain purchase on big problems — that's what we get to do," said Feldman, referring to large-scale machine learning challenges such as new areas of cancer research, or treating traumatic brain injury.

Feldman, who at one point studied under economist Oliver Williamson, explained that he borrowed the expression "gain purchase," meaning to "gain a foothold" in something, from Williamson.

Rick Stevens, head of computing at Argonne, is quoted by Cerebras in its press materials as saying:

> The opportunity to incorporate the largest and fastest AI chip ever—the Cerebras WSE—into our advanced computing infrastructure will enable us to dramatically accelerate our deep learning research in science, engineering and health. It will allow us to invent and test more algorithms, to more rapidly explore ideas, and to more quickly identify opportunities for scientific progress.

Asked about the competition in high-performance computing, Feldman acknowledged that "the DoE is a large buyer for supercomputer infrastructure," and added that the largest of such systems are "now heterogeneous, and combined of many different elements, including CPUs and GPUs." With the Cerebras announcement, he continued, "they are communicating they now have dedicated hardware for AI."

"They're working with Intel for the general-purpose compute, and they have had a big announcement through Cray that they are investing with AMD for GPU work, and now they are announcing with us work with our WSE."

"What you are seeing is the Department of Energy and the supercomputer builders laying out the architecture for the future of supercomputing," said Feldman.
