Automating automation: a framework for developing and marketing deep learning models

Adopting AI is becoming increasingly important. Image: IBM

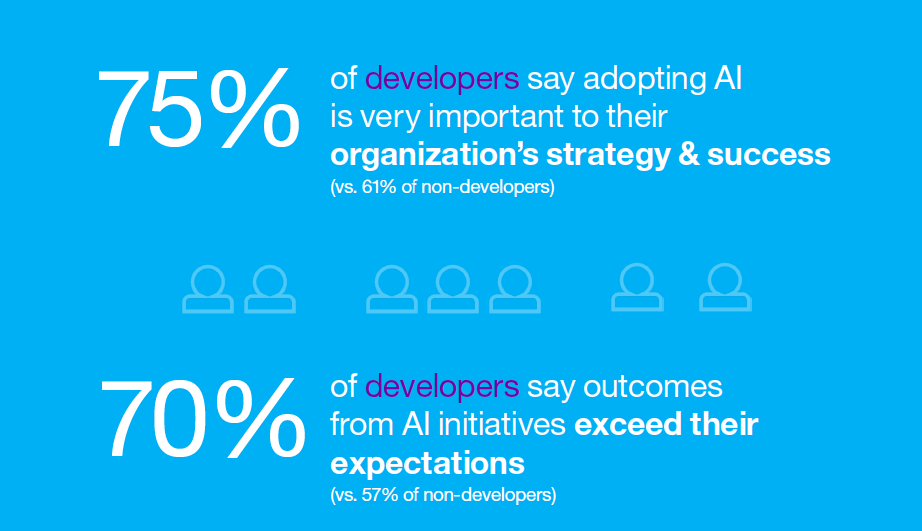

Deep learning (DL), machine learning (ML) and artificial intelligence (AI) are getting a lot of traction and for good reason, as we have been exploring. According to recent surveys, it seems that by now most developers and executives have realized the importance of AI and are at least experimenting with it.

There is one problem though: AI is hard. AI sits on the far end of a spectrum of data-driven analytics applications. So if data science skills are so hard to come by, AI skills are even harder. DimensionalMechanics just announced its approach to closing the AI skills gap, called NeoPulse AI Studio (NAIS). NAIS is part of NeoPulse Framework (NPF), which combines a number of interesting approaches and may show the way for others to follow.

Classifying models

Let's picture this simple scenario: since as we all know the internet is basically about cats, every organization would at some point want to develop an application to scan through images and figure out which ones contain cats for future use. That is actually an entry level classification task, something that DL excels at.

Entry level it may be, but easy it is not: you have to choose a DL framework, understand its inner workings and API, find or develop a model that is fit for the task, train it by feeding it sample data, and then integrate it into your existing stack and deploy it. But since at some point everyone will be able to do this, to stay one step ahead you'd also have to classify images of dogs. How would NPF help you there?

First, by letting you search for a model that does this for you in NeoPulse AI Store. Yes, that sounds analogous to an app store, except you will be able to shop for DL models instead of apps. Having an AI model marketplace sounds great; however, for any marketplace to work, a lot of things need to click.

Rajeev Dutt, DimensionalMechanics CEO and founder, says that the store will initially only host models built using NAIS in order to control their internal structure and have a standard way to represent them. The store will be bootstrapped with models developed by DimensionalMechanics, and rates charged will be based on model specifics - size, computational power required etc. The goal is to make the marketplace affordable and give developers a way to monetize their models quickly.

Dutt says that while DimensionalMechanics has custom models to do image classification, their focus is on customized models: "Out of the box, we have a classification model that can identify objects in videos. It can be used in two ways: on premise, in which case we would license out the actual AI model, or in the cloud, in which case they would call a cloud API. Our core value-add is in creating custom models".

So if you find a model in the store that can identify both cats and dogs, everything works in a snap. But what if you don't? You have two options. One, if you find a model that does something similar to what you want, you can re-train it. So if there is a model that can identify cats, you can re-train it to identify dogs too. Two, if you can't find a model that is close enough to what you want to do, you need to develop one from scratch.

Feeding models with data

Dutt says that "NAIS allows you to use existing image classification models and "fine tune" them so that you don't have to retrain the model from scratch. You can take a generic image classification model and retrain it to recognize dogs - assuming that the original model was built using NAIS. The only thing you need is training data".
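To make the idea of fine-tuning concrete, here is a minimal sketch in plain Python. It is not NAIS or NML code: the `frozen_features` function is a hypothetical stand-in for the layers of an existing model that stay untouched, the "images" are toy lists of pixel intensities, and only a small logistic output layer is retrained on the new labels.

```python
import math

def frozen_features(pixels):
    """Hypothetical stand-in for a pretrained model's frozen layers."""
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return [mean, spread]

def train_head(samples, labels, epochs=500, lr=0.5):
    """Retrain only the final logistic layer; the extractor is never updated."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for pixels, label in zip(samples, labels):
            feats = frozen_features(pixels)
            z = sum(w * f for w, f in zip(weights, feats)) + bias
            error = 1.0 / (1.0 + math.exp(-z)) - label
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, pixels):
    """Return 1 for the new class ("dog" in this toy setup), else 0."""
    feats = frozen_features(pixels)
    return 1 if sum(w * f for w, f in zip(weights, feats)) + bias > 0 else 0

# Toy training data (made up for illustration): 1 = dog, 0 = not a dog.
samples = [
    [0.8, 0.9, 0.7, 0.85], [0.9, 0.95, 0.8, 0.75], [0.7, 0.8, 0.9, 0.85],
    [0.1, 0.2, 0.15, 0.05], [0.2, 0.1, 0.3, 0.25], [0.05, 0.15, 0.1, 0.2],
]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_head(samples, labels)
print(predict(weights, bias, [0.75, 0.9, 0.8, 0.95]))
print(predict(weights, bias, [0.15, 0.05, 0.2, 0.1]))
```

Because only three parameters are being learned here, a handful of labelled examples is enough, which is the intuition behind needing less training data when you fine-tune instead of starting from scratch.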

Both these assumptions are actually kind of a big deal, but let's stick to data for now. Who provides the training data and does the training? What if I don't have training data, or it's not labelled?

DimensionalMechanics is out to put artificial intelligence within reach of more organizations, and is opening a number of fronts while at it. Image: DimensionalMechanics

The provision of training data (and labelling) must be done by the user. Dutt says that NAIS uses ways to reduce the amount of data needed to train - for example when you fine-tune an existing model, you don't need nearly as many images. He adds that NAIS leverages the latest in ML research to reduce data requirements, making it possible to use it even in some cases where the data is not labelled:

"For example, if you have a log of TCP/IP data, you can use this as raw data to train a model to recognize "normal behavior". Once the model has been trained, then the model can tell you if the traffic signature is considered normal or something has happened. Take a network intrusion event. If the user has no training data whatsoever then the user will have to use one of our "off-the-shelf" models".
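The general pattern Dutt describes, learning what "normal" looks like from unlabelled data and then flagging deviations, can be illustrated with a much simpler technique than a neural network. The sketch below swaps in a plain statistical baseline (mean and standard deviation with a z-score threshold); the packets-per-second figures, the threshold of 3 and the function names are all assumptions made for illustration, not anything NAIS exposes.

```python
import statistics

def fit_baseline(normal_values):
    """Learn a profile of normal traffic from unlabelled observations."""
    return statistics.mean(normal_values), statistics.stdev(normal_values)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a reading that lies more than `threshold` deviations from normal."""
    mean, stdev = baseline
    return abs(value - mean) / stdev > threshold

# Made-up packets-per-second readings from a period assumed to be normal.
normal_traffic = [120, 131, 118, 125, 122, 129, 117, 124, 127, 121]
baseline = fit_baseline(normal_traffic)

print(is_anomalous(126, baseline))  # False: within the usual range
print(is_anomalous(900, baseline))  # True: a spike far outside the baseline
```

No labels are needed because the model only has to describe the traffic it has seen; anything it cannot account for is treated as suspicious.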

Same goes for feature selection: "For many problems the neural network will automatically select features or reduce dimensions (e.g. image classification, audio analysis, etc.). In some cases, however, it is necessary for a human to step in. For many of the problems that NAIS is designed to solve, feature selection is handled by the neural network used".

And what if your training data cannot leave your premises, either because of policy restrictions or because it's just too big? Dutt says there is the option of running the model on-prem, but what kind of environment will that require to run in realistic timeframes?

"Typically the requirement is commodity hardware - a machine with a CUDA 8 compatible graphics card running on Linux Ubuntu. We have a range of hardware ranging from Nvidia GeForce 1080s (~$550) to high end K80s (~$3000). Most of our developers train on lower spec hardware. Our K80s are used for training very large models".

Letting models out in the wild

But what if despite all your efforts you still have not managed to find or retrain a model that does what you want? This is where NAIS comes into play - you will have to use it to build your own model. And learn a new language called NML (NeoPulse Modeling Language) while at it. But not to worry, says Dutt, the oracle is there to help you:

"NAIS uses something called "the oracle", which is an AI that knows how to construct AI models. NML is a language that we have designed to engineer AI models. NML exposes a keyword auto that instructs the compiler to consult the oracle to figure out an appropriate answer to a specific question. For example, auto can be used to figure out the right DL architecture.

In this case the oracle is consulted regarding what is the right architecture to use given the hints provided by the developer. The developer doesn't need to know anything about machine learning. It's as simple as x -> auto -> y where x is the input and y is the output. Auto figures out the right architecture to do the mapping.

The thing about the oracle is that it itself learns over time and gets better at its job. There is a theorem that says that DL networks are "universal approximators". Roughly, what this means is that if you have two variables that are related in some way that you don't understand, then there is guaranteed to be a neural network that can map one variable onto the other. As a result, by focusing on DL we can solve a very large set of problems".
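For reference, the theorem Dutt alludes to is usually stated along the following lines. This is a standard textbook form, and the symbols are ours rather than DimensionalMechanics': let $K \subset \mathbb{R}^n$ be compact, $f : K \to \mathbb{R}$ continuous, and $\sigma$ a fixed continuous activation function that is not a polynomial. Then a network with a single hidden layer can approximate $f$ to any desired accuracy:

```latex
\[
\forall \varepsilon > 0 \;\; \exists\, N \in \mathbb{N},\;
\alpha_i, b_i \in \mathbb{R},\; w_i \in \mathbb{R}^n :
\quad
\sup_{x \in K} \Bigl|\, f(x) - \sum_{i=1}^{N} \alpha_i \,
\sigma\bigl(w_i^{\top} x + b_i\bigr) \Bigr| < \varepsilon
\]
```

Note what the statement does and does not promise: it guarantees that suitable weights exist for a continuous relationship, but it says nothing about how large $N$ must be, how much data is needed, or whether training will actually find those weights.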

NML was developed in-house by DimensionalMechanics and is "the only language that we know of that's designed for DL. In 30 lines of code, you can do what takes 600 lines of code using TensorFlow with Python.

NeoPulse Modeling Language is a new language for deep learning, which DimensionalMechanics claims is "designed so that a high school student with a coding background can code it". Image: DimensionalMechanics

The key to NML is that it automates large chunks of AI model generation, which means that the developer doesn't have to do this. NML structures deep learning creation by using an elegant "block" approach that breaks down the problem into intuitive chunks, each of which can be fully automated".

Having an oracle by your side and cutting down on the amount of code needed sounds great, but since we're talking about something called AI Studio after all, the question on every developer's mind probably is whether this is actually an IDE:

"Currently, AI Studio itself exposes a REST interface that allows easy integration with any integrated development environment but we are not focusing on a visual UI yet. It is largely command line driven. We may look at integration with commonly used IDEs such as Atom or Visual Studio in the near future", says Dutt.

And no, the reference to TensorFlow was not a coincidence. Dutt says that in the future DimensionalMechanics may open the door to other libraries or let the user choose their own library, but for the time being this is what NAIS uses under the hood.

In any case, after a model is built and trained, NAIS constructs a package with a thin wrapper to deploy the neural network. To access it you will need the NeoPulse Query Runtime (NPQR). NPQR allows people to interrogate the neural network using a simple REST interface that is automatically generated.

Dutt says that NPQR currently sits on a Linux-based machine with a CUDA 8 compatible graphics card, and that it can be accessed via a web service call from any device (e.g. laptop, phone, etc.).
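What such a web service call might look like from the client side is sketched below in Python. The actual NPQR endpoints and payload format are not described in the announcement, so everything specific here is an assumption made purely for illustration: the host name, the `/models/<name>/query` path, the JSON body and the `image_url` field are all hypothetical.

```python
import json
import urllib.request

def build_query_request(base_url, model_name, payload):
    """Build a JSON POST request for a (hypothetical) model query endpoint."""
    url = f"{base_url.rstrip('/')}/models/{model_name}/query"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def query_model(request, timeout=10):
    """Send the request and decode the JSON reply (needs a reachable server)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))

# Hypothetical host, model name and payload; nothing is sent at this point.
request = build_query_request(
    "http://npqr.example.local:8080/",
    "cats-vs-dogs",
    {"image_url": "http://example.com/pet.jpg"},
)
print(request.get_method(), request.full_url)
```

Calling `query_model(request)` would perform the actual round trip. Since the client needs nothing beyond an HTTP library, the same call could be made from a laptop, a phone app or a back-end service.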

Cats and dogs

So what to make of all of this? Depending on how you look at it, you may say it's the way to the future or overly ambitious, and you'd probably be right either way.

On the one hand, automating automation can be beyond impressive - it can be very practical and offer huge boosts in productivity. Others like Viv, Alpine Data or BeyondCore have already been attempting it with quite some success. The difference is that DimensionalMechanics seems to target a broader audience.

"Where we differ is that we have an AI powering NAIS that knows how to build DL models and gets smarter over time. Using NAIS, we are not confined to any vertical industry - we can solve problems across the board - we are unbounded in terms of the ML problems we can address.

One other salient point is that with NAIS the models that the oracle generates are generally at or near "state-of-the-art" - so a developer with no ML skills can create models that rival those created by ML experts", says Dutt.

Developers with no previous machine learning experience being productive in two weeks' time? That's what DimensionalMechanics promises. Image: DimensionalMechanics

Dutt also claims NML is very simple to learn as it is designed to be intuitive by breaking up the code into blocks that describe their use: architecture, source, input, output, train and run: "We have designed it so that a high school student with a coding background can code it. A developer with no AI expertise can easily build using NML. A business user probably won't be able to use it unless he/she can write scripts".

Clearly, a visual development environment would help there, so why would DimensionalMechanics expend all this effort on building oracles and not do anything about this? One plausible answer is that in a space that is moving in leaps and bounds, there is only so much an 11-person startup can do to take on the Googles of the world. Can DimensionalMechanics get this out before Google or Microsoft, which are reportedly working on something similar?

Which brings up another interesting question: what is DimensionalMechanics trying to be? It is building on top of Google's TensorFlow, the leading DL framework at this point (in mindshare at least). So you could say it's trying to be something like what a number of IDE vendors for Android have been - a facilitator.

But it also looks like DimensionalMechanics is trying to beat Google to the punch by initiating a DL model marketplace, building on Google's own library. Does this make sense, and does DimensionalMechanics have the weight to make this work? And if this is its primary goal, why not go for a non Google-specific DL bridge library like Keras?

At the same time, DimensionalMechanics is also announcing a Series B funding round and a partnership with GrayMeta, which Dutt says involves providing AI services in many different forms and is exciting because GrayMeta has access to a rich and diverse set of global media customers. DimensionalMechanics and GrayMeta are already collaborating on a number of custom projects.

There sure is no lack of ambition or activity there. How many of those claims are proven in practice and how well those bets pay off remains to be seen, but whether it is DimensionalMechanics or someone else that makes it happen, it's the community that will stand to benefit.