Google's quantum computer inches nearer after landmark performance breakthrough

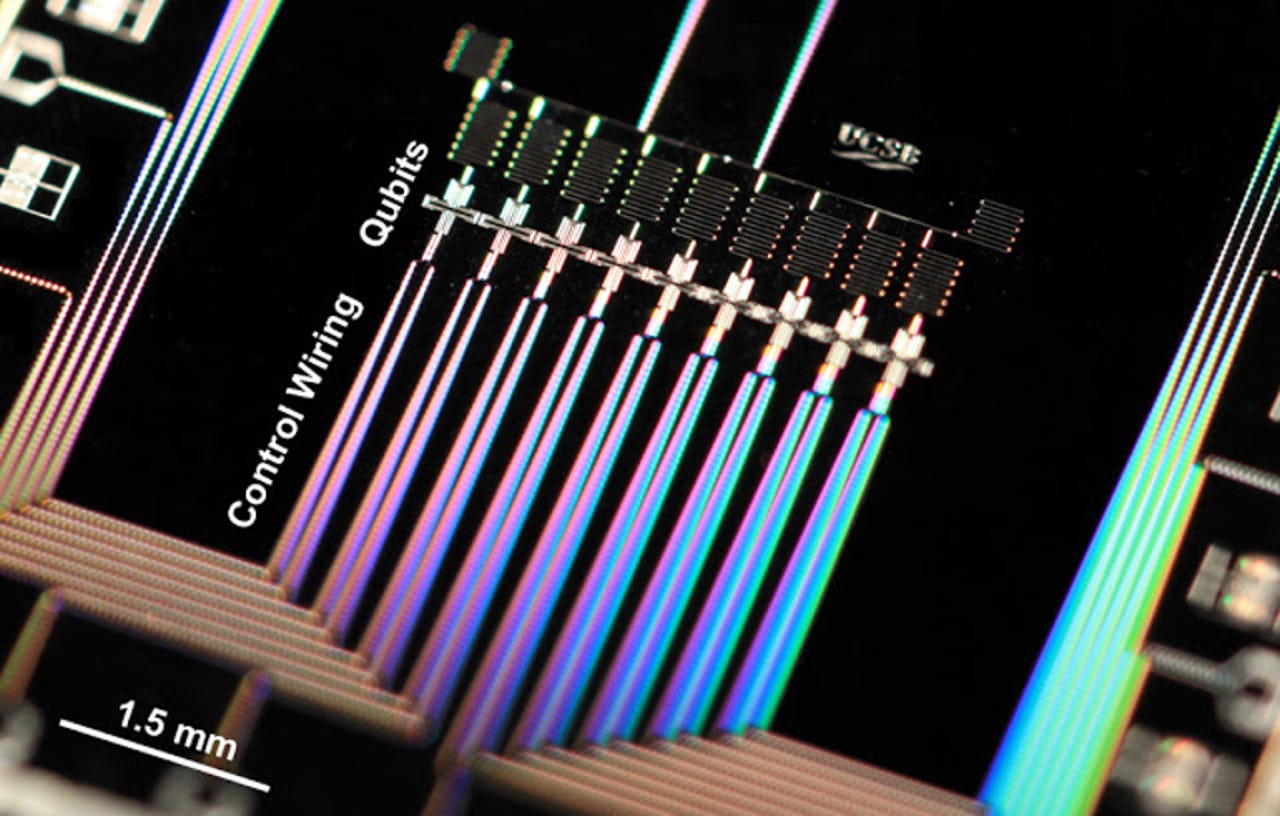

In this superconducting quantum chip, each of the nine cross-shaped qubits is connected to its neighbors and individually controlled.

Google engineers have found a way to make the company's D-Wave quantum computer more scalable and capable of solving problems in multiple fields.

According to Nature, Google has created a device that blends analog and digital approaches and could eventually deliver enough quantum bits, or qubits, for a scalable, multi-purpose quantum computer. Such a machine could solve chemistry and physics problems by, for example, simulating molecules at the quantum level.

The analog approach, or adiabatic quantum computing (AQC), underpins the D-Wave quantum computer Google bought a few years ago. But, as Nature notes, errors can't be corrected as systematically as they can on digital circuits. This limitation introduces a scalability problem, since random noise generated by AQC causes more errors as the system increases in size.
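To see why uncorrected noise limits size, consider a rough back-of-the-envelope model. It assumes every element (qubit operation or interaction) fails independently with the same small probability, which is a simplification and not a figure from Nature or Google. The function name and the 0.1% error rate below are hypothetical, chosen only for illustration.

```python
def success_probability(n_elements: int, error_rate: float) -> float:
    """Chance that none of n_elements independent operations fails.

    Assumes identical, independent error rates (a simplification).
    """
    return (1.0 - error_rate) ** n_elements


# Hypothetical 0.1% error per element: the odds of an error-free run
# shrink quickly as the system grows.
for n in (10, 100, 1000):
    print(n, round(success_probability(n, 0.001), 3))
```

Under this toy model the chance of a clean run falls geometrically with system size, which is the kind of hard limit that systematic error correction is meant to lift.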

Google computer scientists and physicists at the University of California at Santa Barbara think the way to overcome this challenge is to combine analog and digital approaches to error correction.

They describe the method as "quantum annealing with a digital twist" in Digitized adiabatic quantum computing with a superconducting circuit, published online in Nature.

As opposed to the traditional ones and zeros used in digital computers to perform calculations, quantum computers use quantum bits, which can be in multiple states simultaneously and are hence capable of carrying out more calculations in parallel.

According to Nature, Google's team used a row of nine solid-state qubits made from strips of aluminium that are placed on a sapphire surface.

"The researchers cool the aluminium to 0.02 degrees kelvin, turning the metal into a superconductor with no electrical resistance. Information can then be encoded into the qubits in their superconducting state," Nature writes.

"The interactions between neighboring qubits are controlled by logic gates that steer the qubits digitally into a state that encodes the solution to a problem."
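The idea of steering qubits with discrete gates toward a state that encodes a solution can be illustrated with a toy, single-qubit sketch. This is not the team's nine-qubit experiment. It assumes a textbook schedule H(s) = -(1 - s)X - sZ, split into small steps, with each step applied as two gate-like rotations. The function name, schedule and parameters are all assumptions made for illustration.

```python
import cmath
import math


def digitized_anneal(total_time: float, n_steps: int) -> float:
    """Return the probability of measuring |0> after a digitized sweep.

    Toy schedule (an assumption): H(s) = -(1 - s) X - s Z, s from 0 to 1.
    The qubit starts in |+>, the lowest-energy state at s = 0; the
    "solution" is |0>, the lowest-energy state at s = 1.
    """
    dt = total_time / n_steps
    c0 = c1 = 1 / math.sqrt(2)  # amplitudes of |0> and |1> in |+>
    for k in range(n_steps):
        s = (k + 0.5) / n_steps  # midpoint of the current time slice
        # Gate 1: rotation exp(i * a * X) from the X part of H(s)
        a = dt * (1 - s)
        c0, c1 = (math.cos(a) * c0 + 1j * math.sin(a) * c1,
                  math.cos(a) * c1 + 1j * math.sin(a) * c0)
        # Gate 2: rotation exp(i * b * Z) from the Z part of H(s)
        b = dt * s
        c0, c1 = cmath.exp(1j * b) * c0, cmath.exp(-1j * b) * c1
    return abs(c0) ** 2


slow = digitized_anneal(total_time=20.0, n_steps=400)  # many small gates
fast = digitized_anneal(total_time=0.1, n_steps=1)     # one abrupt step
print(f"slow sweep: P(|0>) = {slow:.3f}, abrupt step: P(|0>) = {fast:.3f}")
```

In this sketch a slow sweep built from many small gates lands the qubit close to the target state, while a single abrupt step leaves it no better than a coin toss.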

Rami Barends and Alireza Shabani, quantum electronics engineers at Google, explain that controllability is the key, and have run experiments using nine qubits in conjunction with 1,000 logic gates.

"Qubits, like other physical objects in nature, have a resonance frequency, and can be addressed individually with short voltage and current pulses. In our architecture we can steer this frequency, much like you would tune a radio to a broadcast," they write.

"We can even tune one qubit to the frequency of another one. By moving qubit frequencies to or away from each other, interactions can be turned on or off. The exchange of quantum information resembles a relay race, where the baton can be handed down when the runners meet."
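The relay-race picture can be made concrete with a minimal sketch based on the textbook two-level exchange (Rabi) formula. It is not the actual Hamiltonian of Google's chip. The function name, the coupling strength `coupling` and the frequency difference `detuning` are hypothetical, and units are chosen so that the reduced Planck constant equals 1.

```python
import math


def transfer_probability(coupling: float, detuning: float, time: float) -> float:
    """Probability that one excitation has moved from qubit A to qubit B.

    Textbook two-level exchange formula (an assumption, hbar = 1):
    P(t) = (g / W)**2 * sin(W * t)**2, with W = sqrt(g**2 + (detuning / 2)**2).
    """
    omega = math.sqrt(coupling ** 2 + (detuning / 2.0) ** 2)
    if omega == 0.0:
        return 0.0  # no coupling and no detuning: nothing moves
    return (coupling / omega) ** 2 * math.sin(omega * time) ** 2


g = 1.0                       # hypothetical coupling strength
t_swap = math.pi / (2.0 * g)  # time for a full hand-over on resonance

on_resonance = transfer_probability(g, 0.0, t_swap)
far_detuned = transfer_probability(g, 20.0 * g, t_swap)
print(f"tuned together: {on_resonance:.3f}, tuned apart: {far_detuned:.3f}")
```

When the two frequencies match, the excitation is handed over completely. When they are tuned far apart, the transfer probability in this model never exceeds about 1%, so the interaction is effectively switched off.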

Applications of the technology include the ability to simulate molecules and materials, which could be valuable for chemistry.

Nature notes that Google's device is still a prototype, but that it could pave the way for devices with more than 40 qubits.

As Barends and Shabani note, with their error-correction method, they've removed the limit on scalability caused by noise.

"The crucial advantage for the future is that this digital implementation is fully compatible with known quantum error correction techniques, and can therefore be protected from the effects of noise. Otherwise, the noise will set a hard limit, as even the slightest amount can derail the state from following the fragile path to the solution.

"Since each quantum bit and interaction element can add noise to the system, some of the most important problems are well beyond reach, as they have many degrees of freedom and need a high connectivity. But with error correction, this approach becomes a general-purpose algorithm which can be scaled to an arbitrarily large quantum computer."
