LidarPhone attack converts smart vacuums into microphones

A team of academics this week detailed novel research that turned a smart vacuum cleaner into a microphone capable of recording nearby conversations.

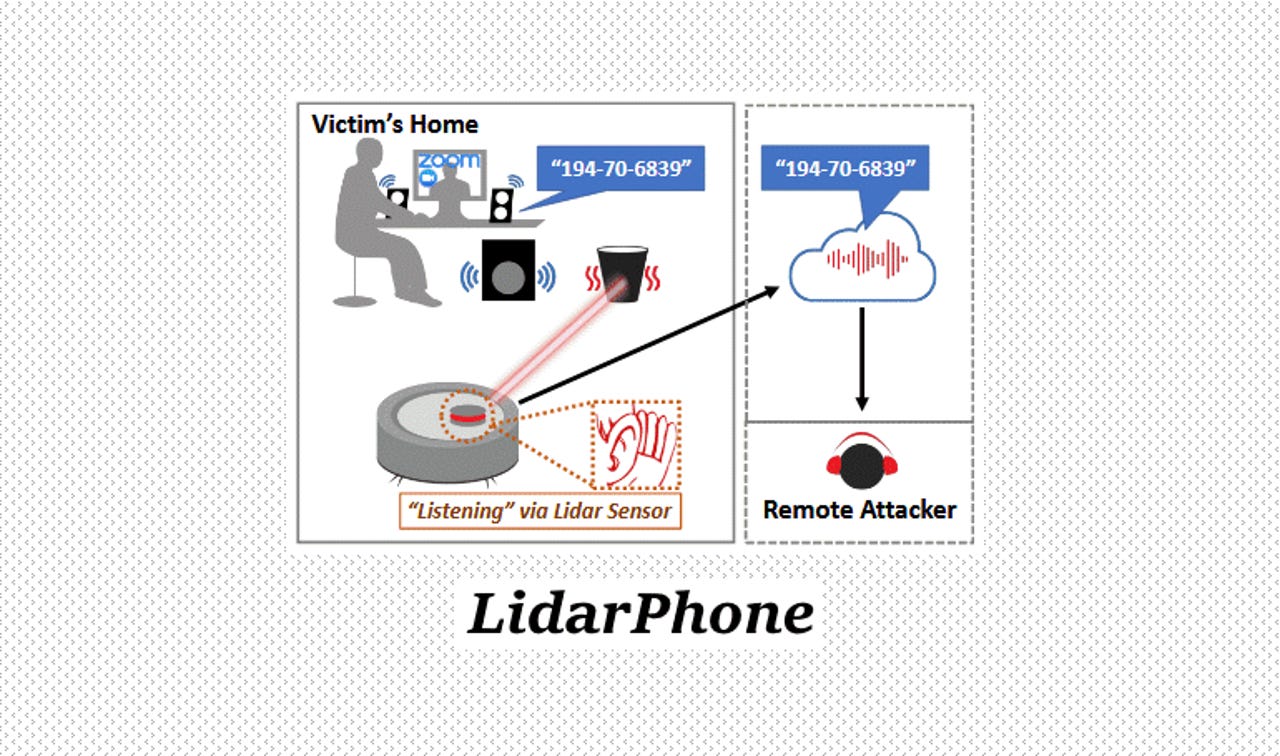

Named LidarPhone, the technique works by taking the vacuum's built-in LiDAR laser-based navigational component and converting it into a laser microphone.

Laser microphones are well-known surveillance tools that were used during the Cold War to record conversations from afar. Intelligence agents pointed lasers at far-away windows to monitor how glass vibrated and decoded the vibrations to decipher conversations taking place inside rooms.

Academics from the University of Maryland and the National University of Singapore took the same simple concept and applied it to a Xiaomi Roborock vacuum cleaning robot.

Certain conditions need to be met

A LidarPhone attack is not straightforward, and certain conditions need to be met. For starters, an attacker would need to use malware or a tainted update process to modify the vacuum's firmware in order to take control of the LiDAR component.

This is needed because vacuum LiDARs work by rotating at all times, a process that reduces the number of data points an attacker can collect.
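To see why rotation matters, consider a rough back-of-the-envelope sketch. The function name and the numbers used below are illustrative assumptions, not figures from the research: a spinning beam only lands on a given object for the fraction of each turn that the object occupies, so only that fraction of the readings is useful.

```python
def samples_on_target_per_second(sample_rate_hz, target_width_deg, rotating):
    """Estimate how many readings per second fall on one object.

    All parameters are hypothetical inputs for illustration:
    sample_rate_hz   -- total LiDAR readings per second
    target_width_deg -- angular width of the object as seen from the robot
    rotating         -- True if the LiDAR is spinning through 360 degrees
    """
    if not rotating:
        # A stationary beam spends every reading on the same object.
        return float(sample_rate_hz)
    # A spinning beam only hits the object for part of each turn.
    return sample_rate_hz * target_width_deg / 360


# Assumed example values: 1,800 readings per second, object 10 degrees wide.
spinning = samples_on_target_per_second(1800, 10, rotating=True)
stopped = samples_on_target_per_second(1800, 10, rotating=False)
print(spinning, stopped)
```

Under these assumed numbers, stopping the rotation multiplies the readings available from a single object by 36, which is the kind of gain the firmware modification is meant to unlock.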

Through tainted firmware, attackers would need to stop the vacuum LiDAR from rotating and instead have it focus on one nearby object at a time, recording how that object's surface vibrates in response to sound waves.

In addition, smart vacuum LiDAR components are nowhere near as accurate as surveillance-grade laser microphones. The researchers said the collected laser readings would therefore need to be uploaded to the attacker's remote server for further processing, in order to boost the signal and bring the sound quality to a level a human listener can understand.
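As a rough idea of what "boosting the signal" can involve, here is a minimal sketch of one generic cleanup step. It is not the researchers' actual pipeline: the function name `enhance`, the moving-average detrending, and the window size are all assumptions chosen for illustration. It strips the large, slowly changing baseline from raw readings and rescales the remaining small vibration component.

```python
def enhance(readings, window=9):
    """Detrend and normalize raw intensity readings (illustrative only).

    readings -- list of raw laser intensity values (hypothetical input)
    window   -- moving-average length used to estimate the slow baseline
    """
    n = len(readings)
    if n == 0:
        return []
    half = window // 2
    detrended = []
    for i in range(n):
        # Estimate the local baseline from neighboring samples.
        lo = max(0, i - half)
        hi = min(n, i + half + 1)
        local_mean = sum(readings[lo:hi]) / (hi - lo)
        # Keep only the fast-changing part of the signal.
        detrended.append(readings[i] - local_mean)
    peak = max(abs(x) for x in detrended)
    if peak == 0:
        # A perfectly flat input carries no vibration information.
        return [0.0] * n
    # Scale so the largest excursion has magnitude 1.
    return [x / peak for x in detrended]
```

Fed a reading stream that sits around a large constant value with a tiny ripple on top, the function returns the ripple scaled to the range -1 to 1. A real attack would need far more than this, which is why the processing is described as happening on a remote server rather than on the vacuum itself.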

Despite all these conditions, the researchers said they were successful in recording and obtaining audio data from the test Xiaomi robot's LiDAR navigational component.

They tested the LidarPhone attack with various objects, by varying the distance between the robot and the object, and the distance between the sound origin and the object.

Tests focused on recovering numerical values, which the research team said they managed to recover with 90% accuracy.

But academics said the technique could also be used to identify speakers based on gender or even determine their political orientation from the music played during news shows, captured by the vacuum's LiDAR.

No need to panic. Just academic research.

But while the LidarPhone attack sounds like a gross invasion of privacy, users need not panic for the time being. This type of attack depends on many prerequisites that most attackers won't bother with. There are far easier ways of spying on users than overwriting a vacuum's firmware to control its laser navigation system, such as tricking the user into installing malware on their phone.

The LidarPhone attack is merely novel academic research that can be used to bolster the security and design of future smart vacuum robots.

In fact, the research team's main recommended countermeasure for smart vacuum cleaning robot makers is to shut down the LiDAR component if it's not rotating.
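A minimal sketch of how such a safeguard might look in firmware logic is shown below. The class name `LidarWatchdog`, its thresholds, and the idea of tolerating a few stalled readings before cutting power are all assumptions made for illustration. Nothing here reflects actual Roborock firmware or a design specified by the researchers.

```python
class LidarWatchdog:
    """Decides whether the laser may stay powered (hypothetical helper)."""

    def __init__(self, min_rotation_hz=1.0, max_stalled_samples=3):
        # Assumed thresholds: below min_rotation_hz counts as "not rotating",
        # and a few consecutive stalled readings are tolerated as noise.
        self.min_rotation_hz = min_rotation_hz
        self.max_stalled_samples = max_stalled_samples
        self.stalled_samples = 0

    def update(self, rotation_hz):
        """Feed one rotation-speed reading; return True if the laser may stay on."""
        if rotation_hz < self.min_rotation_hz:
            self.stalled_samples += 1
        else:
            self.stalled_samples = 0
        return self.stalled_samples < self.max_stalled_samples


watchdog = LidarWatchdog()
for speed in [5.0, 5.0, 0.0, 0.0, 0.0]:
    print(speed, watchdog.update(speed))
```

For this countermeasure to hold up against tainted firmware, the check would presumably need to live somewhere the attacker's code cannot simply disable, such as in the sensor hardware itself. That placement is a design consideration, not something the sketch above provides.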

Additional details about the research are available in a research paper titled "Spying with Your Robot Vacuum Cleaner: Eavesdropping via Lidar Sensors."

The paper is available for viewing in PDF format, and was presented at the ACM Conference on Embedded Networked Sensor Systems (SenSys 2020), yesterday, on November 18, 2020. A recording of the research team's talk is available below: