Opera is testing letting you download LLMs for local use, a first for a major browser

If you use Opera, you'll be happy to know that you can try a new feature today: LLMs that the browser runs entirely locally on your device.

For the first time, according to an Opera press release, a major browser lets users download LLMs for local use through a built-in feature. This means you can take advantage of LLMs in Opera without sending any data to a server. The feature is available in the developer stream of Opera One as a part of the company's AI Feature Drops Program, which lets early adopters test experimental AI features.

Available LLMs include Google's Gemma, Mistral AI's Mixtral, Meta's Llama, and Vicuna. In total, about 150 LLMs from approximately 50 model families are available.

Opera notes that each LLM can take up 2GB to 10GB of storage, though more specialized LLMs should require less. A local LLM can also be considerably slower than a server-based one, Opera warns, depending on your hardware's computing capabilities.

The company recommends trying a few models: Code Llama, an extension of Llama aimed at generating and discussing code; Mixtral, which is designed for a wide range of natural language processing tasks such as text generation and question answering; and Microsoft Research's Phi-2, a language model that demonstrates "outstanding reasoning and language understanding capabilities."

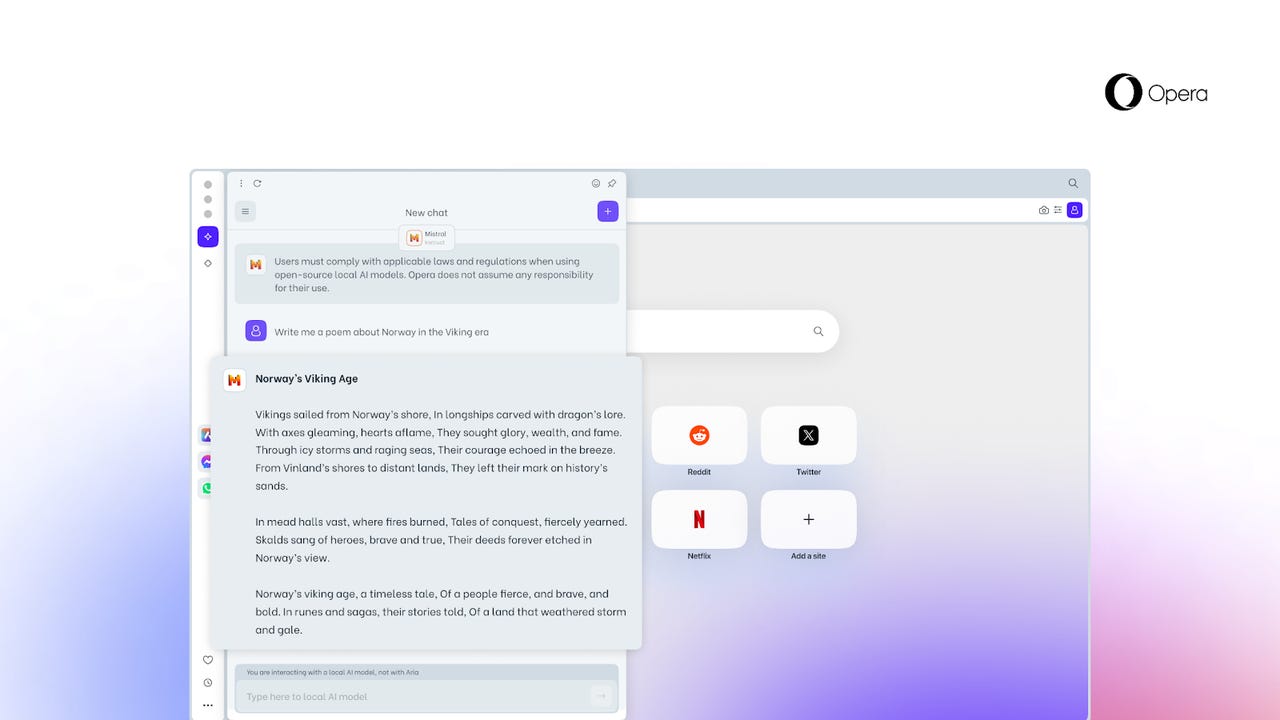

To test this feature, you'll need to upgrade to the latest version of Opera Developer. Once that's done, open the Aria Chat side panel and select "choose local mode" from the drop-down box at the top. From there, click "Go to settings" and search for the models you want to download. Once the LLM you chose has downloaded, click the menu button at the top left to start a new chat. You can then choose the model you just downloaded and start chatting.

The LLM you choose will replace Opera's native AI until you switch back to Aria.