Planet analytics: big data, sustainability, and environmental impact

Data-driven analytics applications are eating the world and transforming every domain. But the world is also being eaten up in a different way, by a number of unsustainable practices.

On Earth Day, we look at what we know about the relationship between big data and the environment: how big data is used to measure sustainability and inform action, and what impact big data itself has on the environment as a whole.

Data-driven sustainability

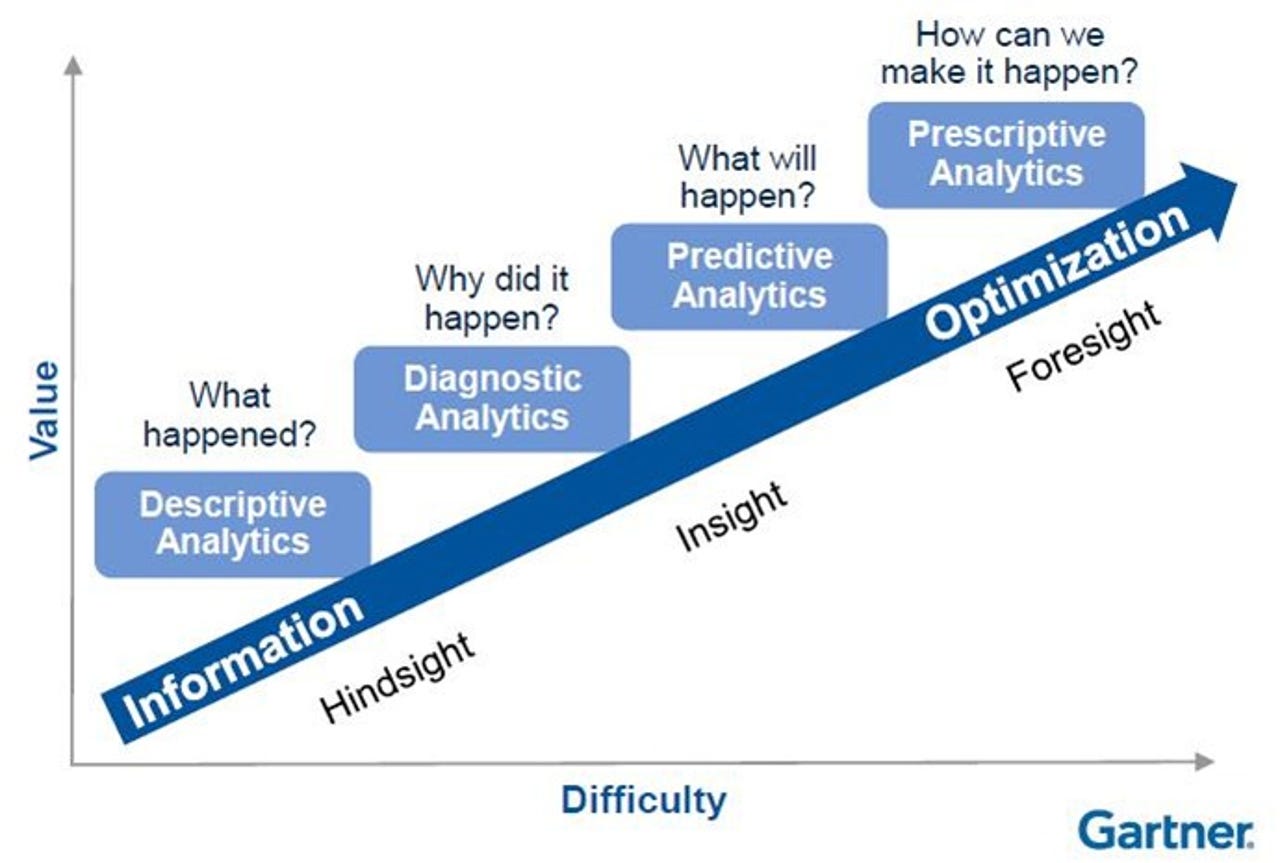

Analytics applications range from capturing data to derive insights on what has happened and why it happened (descriptive and diagnostic analytics), to predicting what will happen and prescribing how to make desirable outcomes happen (predictive and prescriptive analytics).

Gartner's analytics maturity model. (Image: Gartner)

Each organization is at a different point along this continuum, reflecting a number of factors such as awareness, technical ability and infrastructure, innovation capacity, governance, culture, and resource availability.

While businesses vary in each and every one of these factors, they typically have something in common: they operate in a specific domain, with business and governance models that have clearly defined stakeholders and responsibilities.

But things are different when it comes to sustainability. There is no business model for sustainability per se; rather, it is an externality for pretty much every business model. Although businesses are affected by factors such as environmental quality, and their actions can in turn affect the environment, most business models fail to capture this interplay.

Hence the burden of measuring and promoting sustainability falls on the shoulders of governments and of non-governmental and intergovernmental organizations. This can largely account for the gap we observe in analytics applications for sustainability.

Compared to businesses, these organizations are typically at a disadvantage in every possible way. Case in point: the Sustainable Development Goals (SDGs). The SDGs, officially known as "Transforming our world: the 2030 Agenda for Sustainable Development", comprise a set of 17 "Global Goals".

The SDGs are spearheaded by the United Nations through a deliberative process involving its 193 Member States, as well as global civil society. So how does progress towards goals as broad and ambitious as "No Poverty", "Sustainable Cities and Communities" and "Climate Action" get measured and evaluated?

Everything counts in large amounts

Briefly: with great difficulty, if at all. The SDGs are broken down into indicators such as "Percentage of urban solid waste regularly collected" or "CO2 emission per unit of value added". The difficulty is due to a few factors.
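To see why the formulas are the easy part, here is a minimal sketch that treats the two example indicators as plain ratios. The function names, units, and input figures are hypothetical and chosen only for illustration; the official UN indicator metadata defines scope, units, and sources in far more detail. The arithmetic is trivial. The hard part is agreeing on what counts as "regularly collected" or "value added", and obtaining reliable numbers to plug in.

```python
# Hypothetical, simplified indicator definitions for illustration only.
# They are NOT the official UN SDG indicator methodologies.


def waste_collection_rate(collected_tonnes: float, generated_tonnes: float) -> float:
    """Percentage of urban solid waste regularly collected (simplified)."""
    if generated_tonnes <= 0:
        raise ValueError("generated_tonnes must be positive")
    return 100.0 * collected_tonnes / generated_tonnes


def co2_per_value_added(co2_kg: float, value_added_usd: float) -> float:
    """CO2 emission per unit of value added, in kg CO2 per USD (assumed units)."""
    if value_added_usd <= 0:
        raise ValueError("value_added_usd must be positive")
    return co2_kg / value_added_usd


# Hypothetical inputs for a single city and a single sector.
print(waste_collection_rate(collected_tonnes=850.0, generated_tonnes=1000.0))  # 85.0
print(co2_per_value_added(co2_kg=1_200_000.0, value_added_usd=4_000_000.0))  # 0.3
```

Both functions reject a zero or negative denominator, since a missing or unreported baseline is exactly the kind of data gap that makes these indicators hard to populate in practice.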

First, these metrics need to have solid and clear definitions that can be shared and agreed upon among UN members. Work is in progress at the UN to develop a global indicator framework for the SDGs.

The aim of the UN Global Pulse initiative is to use big data to promote SDGs. (Image: UN)

Part of this work is dedicated to building an SDG ontology to help formalize, share, and integrate indicator definitions. Ontologies are formal data models that can greatly facilitate data definition and integration efforts, and the SDGIO project is working towards this goal by integrating relevant work in the field.

But even if metrics are defined and shared, they need to be populated with adequate, reliable data to be useful. Relying on surveys is problematic, so the UN is leading efforts to coordinate stakeholders such as national statistics offices to provide concrete examples of the potential use of big data for monitoring SDG indicators.

The UN has also assigned the Global Pulse innovation initiative to work specifically on applications that contribute towards achieving the SDGs. Global Pulse recently presented its work, most notably some prototype applications to collect data from sources such as satellite imagery and radio broadcasts.

The issues the UN has to deal with are huge and complex. Although these initiatives could signal a shift towards proactively collecting data, rather than waiting for data to be handed over, there is still a long way to go.

The techniques used may be advanced in some cases, but the UN is still at the bottom of the big data pyramid of needs: trying to get access to data. So how far along the analytics continuum are we in terms of planet analytics? The vision may be there, but in practical terms we have not even gotten to first base, as the UN is still trying to get descriptive analytics to work.

Some are trying to get the basics right, while others are after sky-high goals. Yet there's a place for everyone under Big Data. (Image: Martin Kleppmann)

Big Data versus sustainability

But there are also a couple of broader issues at play here: authority and impact.

One of the SDGs, SDG 11, is about Sustainable Cities and Communities. While the UN is working on it, Arcadis has devised a methodology combining metrics in the areas of People, Planet and Profit to produce the Sustainable Cities Index, which analyzes and ranks 100 cities around the world.

This notable initiative was carried out by a private enterprise, using a methodology described only briefly in a two-page annex and data sources including Siemens and TomTom. It's proprietary and opaque, but it's also out there and ready to use now.

What about CO2 emissions? The Paris Agreement is the subject of both ongoing negotiation and criticism, but a few things are worth noting. At this time, even for administrations officially committed to supporting the agreement, such as the EU, CO2 emissions measurement is opaque and inexact.

To begin with, direct measurement of emissions is only practical in facilities such as power plants. For other energy-intensive industry sectors obliged to participate in the EU Emissions Trading System, CO2 emissions are calculated indirectly and reported by third parties.
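What "calculated indirectly" means can be illustrated with a simplified sketch of a calculation-based estimate for a combustion source, assuming the common form in which activity data (fuel quantity times its calorific value) is multiplied by an emission factor and an oxidation factor. The function name and all figures below are hypothetical, and the actual EU monitoring and reporting rules are considerably more detailed. Note that every input comes from fuel records and reference values rather than from sensors on the stack.

```python
def combustion_emissions_t_co2(
    fuel_quantity_t: float,
    net_calorific_value_tj_per_t: float,
    emission_factor_t_co2_per_tj: float,
    oxidation_factor: float = 1.0,
) -> float:
    """Estimate CO2 emissions (tonnes) from fuel use instead of measuring them.

    Hypothetical helper, simplified calculation-based approach (assumption):
        activity data (TJ) = fuel quantity (t) * net calorific value (TJ/t)
        emissions (t CO2)  = activity data * emission factor * oxidation factor
    """
    if not 0.0 <= oxidation_factor <= 1.0:
        raise ValueError("oxidation_factor must be between 0 and 1")
    activity_data_tj = fuel_quantity_t * net_calorific_value_tj_per_t
    return activity_data_tj * emission_factor_t_co2_per_tj * oxidation_factor


# Hypothetical facility: 20,000 t of fuel at 0.05 TJ/t, 56 t CO2 per TJ.
estimate = combustion_emissions_t_co2(20_000, 0.05, 56.0)
print(f"{estimate:,.0f} t CO2")  # 56,000 t CO2
```

Any error in the reported fuel quantity or in the assumed factors propagates straight into the reported emissions, with no independent measurement to catch it.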

No big data, sensors, Internet of Things, or edge analytics there. The asymmetry in applications and priorities is striking.

Utilities may be individually applying big data analytics for marketing and customer retention, or to help customers get an overview of their consumption patterns and optimize them. However, common data models and the integration of utilities and independent renewable power producers into smart power grids are still not operational.

Manufacturers and transport operators may be individually applying big data analytics to optimize engine operation and carrier routing, resulting in cuts in fuel costs and carbon emissions. However, the overall cost of applying big data analytics remains elusive.

Douglas Rushkoff argued that the best smartphone is the one you already own. While big data is not consumer tech, the gist of his argument still applies to server farms running big data applications.


Longevity is a virtue, and replacing servers every couple of years makes no sense environmentally or economically. Raw material sourcing and recycling are far from perfect, so for the time being the best bet for the big data industry is to try to make the most of existing machines.

This is part of the reason why scaling out using commodity machines, rather than scaling up using bigger machines, is seeing increasing adoption. Technologies such as NoSQL databases and Hadoop make it possible to prolong server lives as much as possible and to make the most of the available processing power.

The storage and processing power required for big data applications means that there is a cost associated with each data point and each calculation. For organizations with massive data centers, this is not something to be taken lightly.

More efficient data centers are a priority for such organizations, and the move towards open-source data center designs, cloud services, and cleaner energy may mean that others can also benefit from such economies of scale.

So, what is the net effect of applying analytics to optimize operations? Could improvements in efficiency gained through analytics be offset by the hidden cost in material, power and emissions? Is there a point after which optimization does not make sense anymore? Does the staggering pace of innovation require more resources than it makes available?

Obviously, these are very complex questions to answer. Not so much because we lack the capacity or the data, but mostly because answering them would require making them a priority and starting to see the big picture.

So far, this has not really been happening, but one can always hope we get there before it's too late.