Qualcomm President Amon intends to win in cloud where company failed in past

"Were you surprised we went into the cloud?" asks Qualcomm president Cristiano Amon during a chat by video conference.

Amon was referring to how Qualcomm, a giant in mobile chips, is now hoping to make it big in machine learning "inference" for data centers.

Surprising indeed. Qualcomm has no real presence in the data center. It entered that market in 2014 with lots of gusto, only to back out last year. When Amon became president, in December of 2017, his team looked at the enormous cost of competing with the server CPU king, Intel, and at how little the effort had produced in actual shipments for Qualcomm.

"We said, 'This doesn't make any sense,'" Amon reflects. "It was too difficult to focus on the CPU in the cloud space."

What the company will start sampling later this year is instead a dedicated processor for server computers that runs neither the operating system nor the main server applications. It exists purely to perform predictions, the inference part of AI.

The family of chips, called the "AI 100" and announced Tuesday at an event in San Diego, will go into data centers at the edge of cloud computing. There, the chips will coordinate with client devices, such as smartphones but also cloud-connected IoT devices, that need help performing the matrix multiplication that produces predictions from trained AI models.

That market today is dominated by Intel, whose CPUs in servers perform the vast majority of inference. Intel is hoping to leverage that dominance in AI inference to take share in the "training" part of AI, where Nvidia has been dominant, even as Nvidia looks to take market share from Intel in inference. Everything, in other words, seems up for grabs, so why not Qualcomm?

Qualcomm sees an opportunity in the fact that those edge servers have to be extremely energy efficient. The company sells hundreds of millions of chips per year that must operate under exactly such energy constraints in mobile. Why not extend that energy efficiency to servers that are not burdened with running legacy applications? That, at least, was the company's reasoning.

"So we just vectored the entire effort on edge," is how Amon sums up the company's pivot.

The AI 100, says Qualcomm, will offer more than ten times the inference performance per watt of today's CPUs and GPUs.

Microsoft is a prominent customer quoted by the company in support of the effort. However, Amon points to Google's announcement last month of its "Stadia" gaming service as the most relevant recent data point.

"This is the perfect use case," says Amon. "The cloud is the new console," he avers, meaning the gaming console. Energy-efficient inference performed in edge servers can give mobile devices more brawn than they would have on their own. Because applications such as gaming demand low latency, "you are going to have to go closer to the device and go outside of the traditional large data center building."

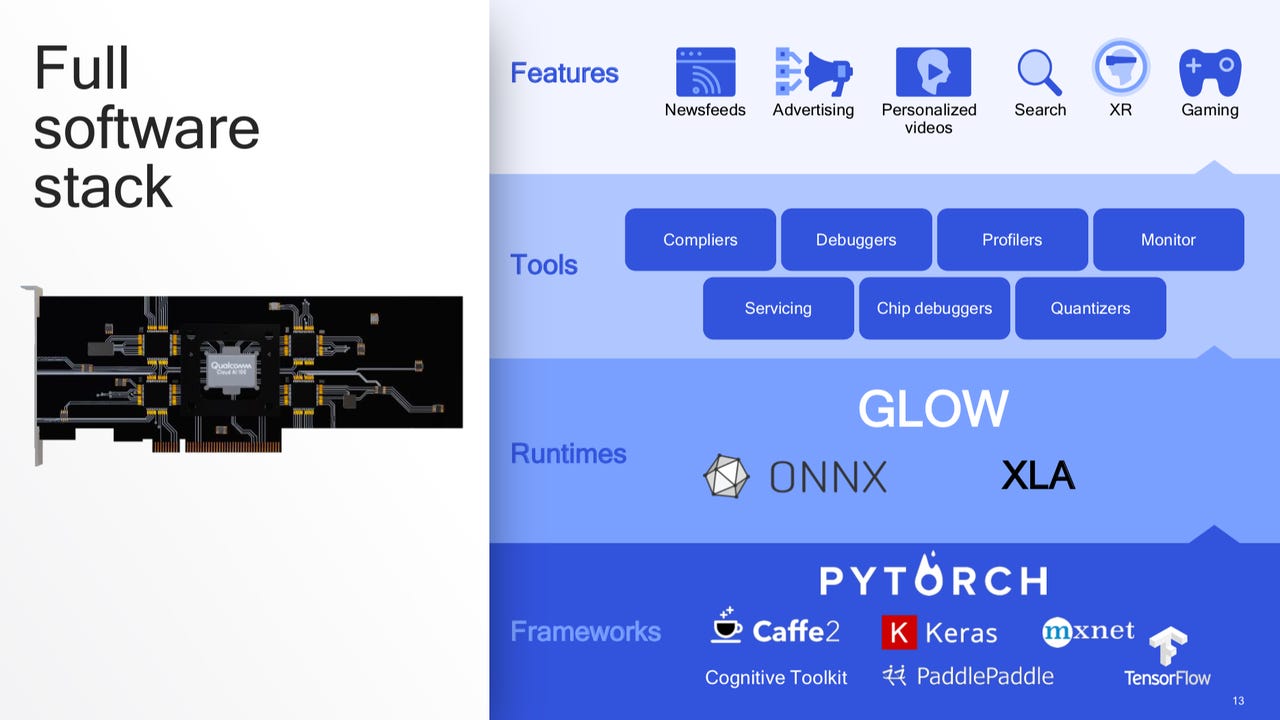

Qualcomm likes the fact that AI inference is a greenfield market, one focused on supporting AI frameworks such as TensorFlow rather than the legacy software that data center CPUs must support.

But why should Qualcomm be successful where Intel dominates and where Nvidia has, presumably, tremendous leverage?

Qualcomm, says Amon, is taking its "Neural Processing Unit," or NPU, the dedicated AI processing logic inside its Snapdragon mobile processors, and "scaling it to the cloud." Technical details are absent for now; Qualcomm plans an event later this year at which it will describe the AI 100 in depth and disclose the relevant benchmark specifications for its performance.

The NPU is a combination of a graphics processing unit, or GPU, and a digital signal processor, or DSP, observes Amon. He didn't go into detail in this chat, but what is commonly known about the NPU is that it combines Qualcomm's "Hexagon" DSP with its "Adreno" GPU. A neural network model loaded onto a client device runs some combination of floating-point and integer operations across the DSP, the GPU and the "Kryo" CPU in Snapdragon, according to the abilities of each of those three functional blocks.

That combination of functional blocks struck a balance between performance and energy efficiency in the mobile version, and served as a kind of initial blueprint for the AI 100, says Amon.

"The architecture [of AI 100] is 100% based on how we design NPUs for Snapdragon mobile," he says. "This gave us a particular design-point advantage, we just copied and pasted it."

Qualcomm president Cristiano Amon scrapped the company's effort to compete with Intel CPUs in the data center. With a more precise focus on machine learning "inference," he is now betting the company can take share from Intel and Nvidia in cloud computing, where Qualcomm failed before.

But again, why is Qualcomm relevant if it hasn't been in the cloud? "We have to respect the incumbent position in cloud," concedes Amon. "We only thought there would be an opportunity for something in cloud if it is close to what we do, mobile."

Qualcomm's foray into machine learning circuits is roughly twelve years old, a still-young effort relative to the company's long history in mobile processors. But it is "getting a lot of attention and resources" inside Qualcomm, he says. The company has established the usefulness of its NPU in mobile devices for tasks such as processing images in camera applications. "The majority of the use cases for edge computing for AI are what has been done with the camera," such as turning night-time photographs into pictures as clear as day. Another use case is security, for things such as processing credit card transactions on a mobile device, he says.

Qualcomm's dominance in smartphones and in connectivity for automobiles means that the company's center of gravity, says Amon, will always be mobile.

One of the things that gives him confidence about this new adventure is the way 5G wireless networks are being built out, a factor Amon first discussed with ZDNet when I met with him at the Consumer Electronics Show in January.

"Think about use in baseband and RF at the edge, for a 5G network," says Amon. Many edge servers performing inference may in fact be built as part of the radio access network, or "RAN," he contends. "This is a stealth thing, but over time you will see us do more on the network side, on the radio side, in infrastructure." What he means is that the company's expertise in Wi-Fi networks will carry over into 5G infrastructure as 5G comes to resemble wireless LANs more than wide-area RANs.

"Look at the newer operators, in Japan, for instance, where you have Rakuten, or in India, where you have Reliance."

"What I think is going to happen is, the solution for enterprise private 5G networks will be virtualized, and more like Wi-Fi rather than RAN," says Amon.

The devil is in the details, they say, and so the world will have to wait until later this year to learn whether Qualcomm can deliver the images-per-watt figures and other benchmark specs that would make it a winner.

"It will be very interesting to see how everyone reacts," said Amon of his company's return to servers.

What do you think? Can Qualcomm be a winner in its brand new market for edge inference chips? Tell me what you think in the comments section.