Tableau announces 'smart' features for 2017, but will it be enough to fend off the competition?

Tableau Software just replaced its CEO after a few rocky quarters on Wall Street, but that didn't seem to faze the enthusiastic crowd of 13,000-plus customers gathered at last week's Tableau Conference in Austin, Texas.

Customers shared their love for the software, whooping and clapping about cool new features demoed during the popular "Devs on Stage" keynote. They also oohed and aahed their way through the opening keynote, as Tableau previewed a new data-engine and data-governance capabilities as well as compelling natural-language-query and machine-learning-based recommendation features.

Why the change in leadership given customer satisfaction levels and continued double-digit growth? More on that later, but first a quick synopsis of the coming attractions.

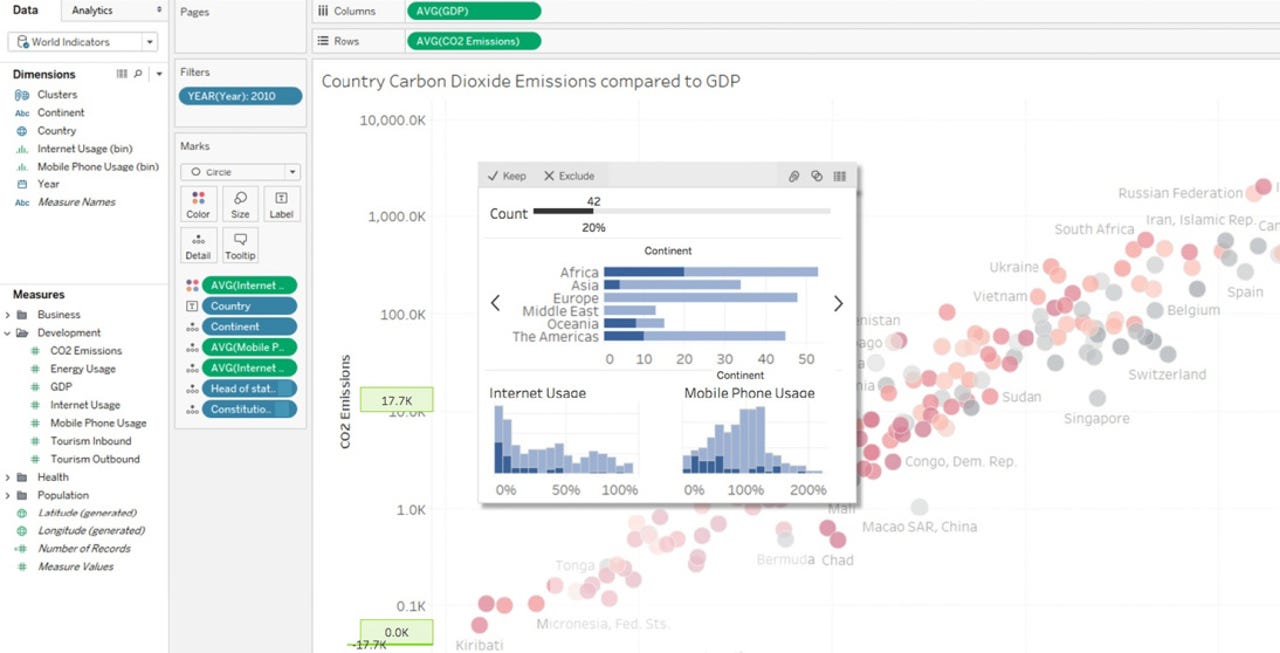

Hover-over insights, shown inset in blue, will bring instant analysis to Tableau that goes beyond showing the details when you mouse over a data point.

Coming in 2017

Tableau broke its coming attractions into five categories: Visual Analytics, Data Engine, Data Management, Cloud and Collaboration. Here's a closer look at what's in store and a rough idea of what to expect when.

Visual Analytics: Tableau highlighted a bunch of visual data-analysis upgrades starting with instant, hover-over insights that go beyond just showing a data point when you mouse over a point in a chart. In the carbon dioxide emissions vs. GDP visualization pictured above, for example, the hover-over insight inset in blue shows that most of the countries in a lassoed set of plots selected from the chart are in Africa. Also presented are related insights into Internet and mobile phone usage. The software will generate these drillable insights automatically as the user hovers over a data point.

Tableau is also beefing up spatial and time-series analysis capabilities, adding the ability to layer multiple data sets against shared dimensions such as location. For example, you might want county, municipal and zip code views of the same geographic areas. Look for these features to show up in the first half of next year.

Further out on the horizon (in the second half of 2017) Tableau expects to introduce natural language query capabilities. Aided by semantic and syntactic language understanding, this feature will enable users to type questions such as "show me the most expensive houses near Lake Union" (see image below). In this case "expensive" and "near" are relative terms, so the tool will offer a best-guess visualization with slider adjustments for "last sales price" and "within X miles of Lake Union" so the user can fine-tune the analysis.
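To make the idea of relative terms concrete, here is a minimal Python sketch of how "expensive" and "near" might reduce to two adjustable thresholds. This is not Tableau's implementation; the `House` record, the `expensive_near` function, the sample listings and the approximate Lake Union coordinates are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class House:
    address: str            # hypothetical sample data, not real listings
    last_sale_price: float
    lat: float
    lon: float


def miles_between(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles, using the haversine formula."""
    earth_radius_miles = 3958.8
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_miles * asin(sqrt(a))


def expensive_near(houses, center, min_price, max_miles):
    """Treat 'expensive' and 'near' as two slider values and filter on both.

    Results are sorted with the highest sale price first.
    """
    lat0, lon0 = center
    matches = [
        h for h in houses
        if h.last_sale_price >= min_price
        and miles_between(lat0, lon0, h.lat, h.lon) <= max_miles
    ]
    return sorted(matches, key=lambda h: h.last_sale_price, reverse=True)


# Approximate coordinates for Lake Union (an assumption for this sketch).
LAKE_UNION = (47.64, -122.33)

SAMPLE_HOUSES = [
    House("A", 2_000_000, 47.64, -122.33),
    House("B", 500_000, 47.65, -122.34),
    House("C", 3_000_000, 47.00, -122.00),
]

# A best-guess starting point; moving either "slider" changes the result set.
best_guess = expensive_near(SAMPLE_HOUSES, LAKE_UNION, min_price=1_000_000, max_miles=2)
```

Loosening `max_miles` or lowering `min_price` plays the role of the slider adjustments described above: the question stays the same, and only the interpretation of the relative terms changes.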

MyPOV: Tableau's differentiation compared to simplistic data-visualization tools is supporting a flow of visual exploration, correlation and analysis. The new hover-over insights and layered details will make Tableau visualizations that much more powerful, enabling developers to create fewer visual reports that can support myriad analyses.

Natural language query capabilities expected in late 2017 will simplify data exploration for novice and experienced analysts alike.

Hyper Data Engine: Acquired in March, the Hyper Data Engine promises faster analysis and data loading and higher concurrency, supporting "up to tens of thousands of users" on a single shared server. Data loads that used to run overnight will take seconds with Hyper, says Tableau. Last week the company demonstrated ingestion of 400,000 rows of weather data per second with simultaneous analysis and data refreshes.

Hyper will replace the existing Tableau Data Engine (TDE), starting with the company's Tableau Online service by the end of this year. Hyper is expected to become generally available in a software release in the second half of 2017. Migration of TDE files will be seamless and the new engine will run on existing hardware, Tableau reports.

MyPOV: If Hyper lives up to its billing it will eliminate performance constraints that many Tableau customers endure when dealing with high data volumes, simultaneous loading and analysis, and high numbers of users. The proof will be in the pudding, but Tableau is confident that Hyper's columnar and in-memory performance will ensure stream-of-thought analysis without query delays. In fact, they expect Hyper to eventually serve as a stand-alone database option as well as a built-in data engine.

Data Management: As Tableau has grown up from a departmental solution into an enterprise standard, the company has had to address the needs and expectations of IT. To address data governance, for example, it's introducing (likely in the first half of 2017) a new Data Sources page and capability for data owners/stewards to certify data sources. A green "Certified" symbol (see image below) will show up wherever that data set is used to show that it has been vetted, that security rules are in force and that related joins and calculations are valid. More importantly, when users add their own data or otherwise depart from the certified data, visual cues will show that the calculations are derived from non-certified data.

To support data governance, Tableau will introduce a data-certification capability that will show when measures are and are not based on vetted data and calculations.
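The certification rule described above can be sketched in a few lines of Python. This is an illustrative model only, not Tableau's design: the `is_certified` function, the lineage mapping and the source names are hypothetical. The assumption is that a measure counts as certified only when every source it draws on has been certified.

```python
def is_certified(measure, lineage, certified_sources):
    """Return True only if every source feeding the measure is certified.

    `lineage` maps a measure name to the set of data sources it is
    computed from (a hypothetical structure for this sketch).
    """
    return all(source in certified_sources for source in lineage[measure])


# Hypothetical example: one vetted source and one user-added spreadsheet.
certified_sources = {"sales_warehouse"}
lineage = {
    "total_revenue": {"sales_warehouse"},
    "revenue_vs_forecast": {"sales_warehouse", "my_forecast.xlsx"},
}

badges = {
    measure: "Certified" if is_certified(measure, lineage, certified_sources) else "Not certified"
    for measure in lineage
}
```

Here `revenue_vs_forecast` loses its badge because it mixes in a user-supplied file, which is the kind of departure from certified data that the visual cues are meant to flag.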

Diving deeper into data management, Tableau is working on "Project Maestro," which will yield a self-service data-prep and data-quality module likely to show up in the second half of next year. This optional, stand-alone module will deliver drag-and-drop-style functionality aimed at the same data owner/steward types who are likely to certify data sets. The idea is to deliver the basics of data-prep and data cleansing required for simple use cases. Tableau customers are likely to continue to rely on software from partners such as Alteryx and Trifacta to handle complex, multi-source and multi-delivery-point data-prep and data-cleansing workflows.

MyPOV: Tableau has previously supported the concept of certified sources, but this upgrade is supported by collaborative capabilities (see section below) that will enable new calculations and dimensions to be suggested, reviewed and added to a certified set. Governance capabilities must be agile or users will quickly work around trusted-but-stagnant data sources. On the data-prep front, Maestro looks like it will deliver the 20 percent of functionality that gets most of the use. We'll see whether it can address 80 percent of data-prep needs and how it stacks up pricewise versus third-party tools.

Cloud: Tableau addresses what it sees as a hybrid future with Tableau Online, its multi-tenant cloud service, coupled with cloud-hosted and on-premises deployments. Likewise, Tableau expects to see a mix of cloud and on-premises data sources. Tableau currently relies on pushing extracts out from on-premises sources, but in the first half of 2017 it expects to introduce a Live Query Agent capability that will securely tunnel through firewalls for direct access to on-premises sources.

On the cloud side, Tableau has connectors for popular SaaS applications such as Salesforce, but you can soon expect to see additional connectors for Eloqua, Anaplan, Google AdWords and ServiceNow. On the horizon are connectors for cloud drives such as Box and Dropbox.

In a separate development expected in 2017, Tableau will port its Server software to run on multiple distributions of Linux. This move is important for cloud-based deployments because Linux dominates in the cloud and costs as little as half as much as comparable Windows server capacity. Tableau itself will be the first to take advantage by soon porting the Tableau Online service to run on Linux.

MyPOV: Tableau has a head start on cloud compared to its closest rival, Qlik, and I particularly like its embrace of the tools and capabilities of public cloud providers. For example, Tableau is encouraging the use of Amazon RDS for PostgreSQL, ELB for load balancing, S3 for backups and Amazon CloudWatch for load monitoring. And when natural language querying arrives, Tableau says it's likely to take advantage of Alexa and Cortana voice-to-text services to support mobile interaction.

Collaboration: Tableau is adding a built-in collaboration platform to its software to facilitate discussion. The platform will enable users to exchange text messages directly with data stewards and other users to answer questions such as, "is this the right data for my analysis?" Bleeding into the new data-governance capabilities, you'll also be able to see what data is used where and ask whether a new dimension or calculation can be added to a certified data set.

Delivering on a longstanding user request, Tableau is adding data-driven alerting to its software. In another upgrade that will personalize the software, Tableau is adding a Metrics feature that will let users save their favorite stats and capsule visualizations so they can review them, say, each morning on their desktop or on mobile devices.

Tableau says its software will get smarter with the introduction of machine-learning-based Recommendations. The ambition is to go beyond the "show me" visualization suggestions currently available to auto-suggest data based on the user's historical behavior, similar users' behavior, group membership, data certifications, user permissions, recent item popularity, and the context of a user's current selections. Don't expect to see that functionality until the second half of 2017.

MyPOV: Some of this stuff seems obvious and overdue. Collaboration, for example, shows up in lots of software, and it's particularly helpful for discussing data and analyses. Alerting within Tableau has heretofore been addressed by custom coding and third-party add-on products, but it's a long-overdue, basic capability that should be built into the software. Smart, machine-learning-based recommendations are more cutting edge, but with the likes of IBM (with Watson Analytics) and Salesforce (with BeyondCore) already offering such features, Tableau may be in good company by the time it rolls out its own Recommendations feature.

Why the Leadership Change?

Over the last couple of years, Tableau has been stepping up into more big enterprise deals. It's also facing more competition, including from the likes of cloud giants Microsoft (with Power BI) and Amazon (which will soon release QuickSight). At the same time Tableau is moving into more cloud deployments and subscription-based selling (whether on-premises or in the cloud). These transitions have contributed to revenue and earnings surprises in recent quarters, and that's something Wall Street never likes.

In August, Tableau tapped Adam Selipsky, previously head of marketing, sales and support at Amazon Web Services, to "take the company to the next level," as former CEO, and now chairman, Christian Chabot put it in a press release. That's just what Selipsky did at Amazon, helping AWS to evolve from departmental and developer-oriented selling to big corporate deals. It's Selipsky's challenge to keep Tableau growing and profitable even as it pushes into bigger deployments and increasingly cloud- and subscription-based deals.

MyPOV: Now that it's in enterprise-wide deals, Tableau is facing more competition and deal-delaying offers of "free" software thrown in with big stack deals. Tableau has consistently won the hearts and minds of the data-analyst set, but on this bigger stage it must also address the needs of data consumers that might not be data savvy enough for the company's usual interface. The Governance, Alerting, Metrics, Natural Language Query and Recommendations announcements -- as well as new APIs for embedding into applications -- are all moves in the right direction. Nonetheless, I won't be surprised to see a lighter user interface and lower-cost-per-seat options that will round out Tableau as a platform for enterprise-wide deployments.
