The final word on the 'megapixel myth'

There's been a lot of talk about the "megapixel myth" lately, all started by David Pogue of the New York Times declaring that megapixels are a "big fat lie". Fellow blogger David Berlind also did an entire series of blogs with his own set of tests, declaring that "there's a good chance you'll never need more than 3 or 4 megapixels from your camera". I read the articles and blogs, took a look at the methodologies described, and found some serious problems that affected the conclusions. Here's a list of the issues; I'll explain each one. [Update 11:00 PM - Pogue responded in the talkback and I responded to him.]

- Computer down-sampling was used to render lower resolution images

- Lack of adequate test patterns for resolution testing

- Lossy compression JPEGs were used which adds artifacts

- Lens and motion factors not accounted for

- Not all image sensors are created equal

- Megapixels are a unit of area and not resolution

- Actual resolutions needed for printing

1: The first MAJOR issue is the use of computer down-sampling (or down-sizing) to generate lower resolution samples. Someone pointed out to Pogue that this may invalidate the results, but Pogue dismissed it with the simple comment "I'm not entirely convinced". David Pogue is attempting to conduct a "scientific" experiment to prove his hypothesis that the digital camera industry is pushing the "big fat lie" of more megapixels, yet he sees no problem with synthesizing the data and violating fundamental scientific principles. I understand Pogue is no scientist, but he reaches a massive audience and he has a responsibility to be accurate when he's making such bold and damning assertions about an industry. I'm going to explain why this completely invalidates his experiments.

Whenever you down-sample a computer image, especially with a high quality algorithm like the ones Photoshop provides, you are guaranteed to get improved image quality because the noise, slight focus errors, and slight motion blur in the image are averaged out, and you're left with a very pure image that maximizes the effectiveness of every single pixel. If you took a 4500x3000 (13.5 megapixel) image from a high-end camera and down-sampled it to a 2250x1500 (3.375 megapixel) image, I can guarantee you that the resulting 3.375 megapixel image will be vastly superior to any image captured by a native 4 megapixel camera. Pogue simply assumes that a down-sampled image is the same as a lower resolution image captured with fewer megapixels. Not only is it an assumption, it's a really bad assumption, and anyone who does the experiment or works with digital images a lot will know that the native 4 megapixel camera will invariably have noise and imperfections in its image. The down-sampled 3.375 megapixel image, on the other hand, will have far fewer imperfections because the noise happens at the 13.5 megapixel level and is mostly averaged out to produce a very clean image at 3.375 megapixels. [Updated 11:00 PM - Pogue did capitulate and allowed a professional photographer to use cropping instead of down-sampling to come up with lower megapixels.]
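To make the averaging argument concrete, here is a minimal sketch in Python. It assumes a flat grey subject with random per-pixel noise and uses a plain 2x2 box average, which is a stand-in I chose for simplicity and not the algorithm Photoshop actually uses. The image size, grey level, and noise level are made-up numbers for illustration only.

```python
import random
import statistics

def box_downsample(pixels, width, height):
    """Halve each dimension by averaging every 2x2 block.

    `pixels` is a row-major list of grayscale values.
    """
    out = []
    for y in range(0, height - 1, 2):
        for x in range(0, width - 1, 2):
            total = (pixels[y * width + x]
                     + pixels[y * width + x + 1]
                     + pixels[(y + 1) * width + x]
                     + pixels[(y + 1) * width + x + 1])
            out.append(total / 4.0)
    return out

# All of these values are assumptions for the illustration.
random.seed(0)
WIDTH, HEIGHT = 200, 200
TRUE_GREY = 128.0    # flat grey subject, so any variation is pure noise
NOISE_SIGMA = 10.0   # assumed per-pixel sensor noise

noisy = [random.gauss(TRUE_GREY, NOISE_SIGMA) for _ in range(WIDTH * HEIGHT)]
small = box_downsample(noisy, WIDTH, HEIGHT)

noise_full = statistics.pstdev(noisy)
noise_small = statistics.pstdev(small)
print(f"noise at full size:      {noise_full:.2f}")
print(f"noise after 2x2 average: {noise_small:.2f}")
```

Averaging four independent noisy pixels cuts the noise roughly in half, which is why a down-sampled image looks cleaner than one captured natively at the lower pixel count, where nothing gets averaged.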

The concept of optical resolution

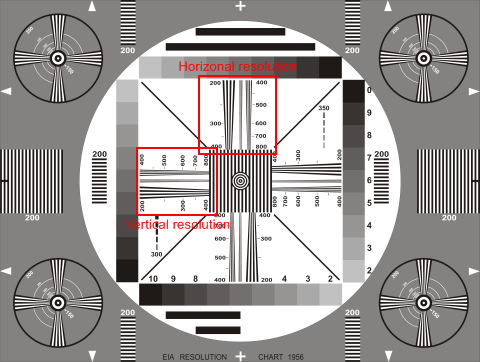

2: The other big issue is the lack of an adequate test pattern. You can't just shoot some random object, eyeball it, and say "yup, this one's better than the other" because it's totally unscientific and inaccurate. Any real optical resolution test will use a standardized pattern. The EIA 1956 video resolution target, for example, is used to test television systems, but you can use 5 of them side by side to get 5 times the resolution, which is enough to test digital cameras. Not using a standardized pattern for resolution testing is like going to an eye doctor who holds up his fingers and asks you how many you see to figure out if your eyesight is good or bad. Would you actually trust that optometrist?

You can purchase official EIA test patterns, but they're not cheap, especially if you need 5 of them for digital camera testing. Note that you can buy a single real one and use four blank sheets of paper of equal size as space fillers. I actually recreated a high-quality vector-based pattern in Corel Draw 7 back in late 1999 and posted it on the Internet for anyone to download and print. I've reposted it here for you to download, but be aware that it's only in Corel 7 and Illustrator 8 format, so you'll need something that can import either of these files. I tried an SVG format but the plug-in did a horrible job on the print preview, and a conversion to Visio and Word was equally disappointing. Note that this can't be used for official testing, but it's pretty darn close if you print it on the brightest laser paper with a 1200 DPI laser printer. Here is what the pattern looks like.

To calculate digital camera resolution, you need to have 5 of these sheets plastered end to end and you need to fill your entire camera frame with them. Note that most SLR viewfinders only show you about 85% of the image, so you'll have to play with it until you get all 5 sheets filling the full frame. You don't need to actually use 5x5 sheets; 5 sheets from top to bottom will suffice, as long as they fill the frame perfectly from top to bottom. Then all you need to do is look at the two sections I highlighted in red and see where the wedges blur to the point of being indistinguishable. Since a single EIA chart filling the full frame tests 200 to 800 lines of resolution, 5 of these sheets allow you to test a digital camera between 1000 and 4000 lines of resolution. I did some sloppy measurements for illustration purposes, but the official methodology is to zoom in and use the eyedropper tool to see where the color reaches the halfway grey point between the lightest and darkest sections. If the lightest part has a value of 200 and the darkest has a value of 100, then the point at which the gap hits 150 is the midpoint where the wedge is no longer distinguishable, and that's the optical resolution limit of the camera used to capture the image. Also note that I should have used a tripod, much more lighting, and a slow shutter with much more exposure to get a cleaner, lighter white, but the following was the best I could do in a hurry.

| Calculating optical resolution | | |
| --- | --- | --- |
| Ideal sample | Vertical sample | Horizontal sample |

Note how my resolution peaked at the 400 lines marker. Since I'm shooting 5 of these sheets, the actual resolution is 5 times higher, which means I can technically resolve about 2000 lines with my 8 megapixel digital SLR even under less than ideal conditions, without an adequate exposure or a tripod. I can assure you that a 4 megapixel digital camera wouldn't do nearly as well. Some may wonder what kind of uber geek would have time to do this kind of optical resolution testing to decide what kind of camera to buy; my answer is that you don't have to. There are places like Digital Photography Review, which does the most hardcore in-depth reviews of digital cameras I've ever seen, such as this review of the Canon 400D, and they show you the actual sample images under all sorts of conditions.
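For anyone who does want to run the chart test, the arithmetic on this page can be sketched in a few lines of Python. This is only an illustration of the numbers quoted here (200 to 800 lines per chart, 5 sheets, a 400 line reading, and a halfway grey point of 150); the function names are mine and not part of any official EIA procedure.

```python
def midpoint_grey(reading_a, reading_b):
    """Halfway grey value between the two extreme eyedropper readings."""
    return (reading_a + reading_b) / 2

def chart_range(sheets, single_min=200, single_max=800):
    """Range of lines testable when `sheets` charts are stacked to fill the frame."""
    return single_min * sheets, single_max * sheets

def effective_lines(marker_reading, sheets):
    """Scale the marker where the wedge blurs out by the number of stacked sheets."""
    return marker_reading * sheets

print(midpoint_grey(100, 200))   # 150.0
print(chart_range(5))            # (1000, 4000)
print(effective_lines(400, 5))   # 2000
```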

Other things to avoid when testing camera resolution

3: No resolution test should use a lossy compression format like JPEG if we're trying to see what the best quality can be. TIFF at the very least, or the RAW image format, should be used. [Update 11:00 PM - I should have been more specific here that it was David Berlind who used the JPEG format and not David Pogue.]

4: Lens and motion issues must be kept to a minimum. The slightest bit of motion or missed focus would prevent light from converging properly on the image sensor. You could easily end up with an effective optical resolution that would only justify 4 megapixels rather than the 16 megapixel sensor you're actually using, because you shifted the image by the length of one pixel or the lens was out of focus and the light only converged onto 2x2 pixels. You are then looking at the limitations of the lens, or of an inadequate shutter speed that causes blurring, rather than the limitations of the image sensor. No matter how many quality megapixels the camera has, it's that old saying of garbage in, garbage out.
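The 16 megapixel versus 4 megapixel example above can be written as a crude back-of-the-envelope model. The sketch below assumes that a blur spot covering a block of pixels behaves like a single larger pixel, which is a simplification of mine and not a real optics calculation.

```python
def effective_megapixels(sensor_mp, blur_width_px, blur_height_px):
    """Crude estimate: each blur spot of w x h pixels acts as one pixel."""
    return sensor_mp / (blur_width_px * blur_height_px)

# Light that only converges onto a 2x2 block on a 16 megapixel sensor
print(effective_megapixels(16, 2, 2))   # 4.0
```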

5: Not all digital image sensors are created equal. A dirt cheap camera with a supposed 4 megapixel sensor won't be the same as a digital SLR with a 4 megapixel sensor. So it's not just the number of megapixels; the quality of the megapixels counts too. The more accurate way of measuring resolution is to look at optical resolution using the EIA test charts. If I had my way I'd ban megapixel advertising and demand optical resolution specifications, stated as how many lines can be resolved on a standardized target under a standardized set of lighting conditions. That would tell you the true resolution capability rather than the theoretical capability in megapixels, which may or may not be accurate.

6: And finally, one of the biggest mistakes people make about megapixels is assuming that megapixels somehow equal resolution. One naturally assumes that 8 megapixels must be twice as good, or twice the resolution, of something that's 4 megapixels. That's simply not true, because twice the vertical and horizontal resolution of a 4 megapixel image is 16 megapixels. Megapixels are a unit of area and not a unit of resolution. You can't expect something to have double the resolution unless it has 4 times the megapixels. You can't expect something to have 4 times the resolution unless it has 16 times the megapixels. You'd have to have a 64 megapixel camera to quadruple the resolution of a 4 megapixel camera.
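The area-versus-resolution relationship is easy to check with a couple of lines of Python. This is just a sketch of the arithmetic in the paragraph above, with function names of my own choosing; it assumes the same aspect ratio and ignores lens and sensor quality entirely.

```python
import math

def megapixels_for_linear_gain(base_mp, gain):
    """Megapixels needed to multiply linear resolution by `gain`."""
    return base_mp * gain ** 2

def linear_gain(base_mp, new_mp):
    """How much linear resolution changes when going from base_mp to new_mp."""
    return math.sqrt(new_mp / base_mp)

print(megapixels_for_linear_gain(4, 2))   # 16
print(megapixels_for_linear_gain(4, 4))   # 64
print(round(linear_gain(4, 8), 2))        # 1.41
```

So going from 4 to 8 megapixels buys roughly 1.4 times the theoretical linear resolution, not 2 times.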

7: If you want semi-professional grade images to print at 8x12 inches at 300 DPI, you need 8.64 megapixels to do an exact translation. A 9x13.5 inch image (which costs about $2.50 to print in a one hour shop) at 300 DPI will require roughly 11 megapixels, which is what newer digital SLRs under $1000 can deliver these days. Of course, if you want to be able to crop and/or down-sample, then the more pixels you have the better, but anything over 11 megapixels at this time gets to be very expensive and puts you in the $4000+ range.
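The print figures above come straight from multiplying out the pixel dimensions. Here is a short sketch of that calculation, assuming print sizes are in inches and one captured pixel per printed dot; the function name is just for illustration.

```python
def print_megapixels(width_in, height_in, dpi=300):
    """Megapixels needed for a 1:1 print at the given size and DPI."""
    width_px = width_in * dpi
    height_px = height_in * dpi
    return width_px * height_px / 1_000_000

print(print_megapixels(8, 12))     # 2400 x 3600 pixels
print(print_megapixels(9, 13.5))   # 2700 x 4050 pixels
```

The first call works out to 8.64 megapixels and the second to just under 11.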

So in conclusion, megapixels absolutely do matter, though only the square root of that number will give you an accurate idea of how much theoretical resolution you're getting. The quality of the sensor and other factors such as the lens and the person operating the camera play a huge role in the quality of your images. The biggest factor that is completely outside the control of the camera is the amount of quality light hitting the subject, and lower light levels will always produce more noise than bright daylight images. For example, daylight is about 1000 times stronger than indoor lighting, which is why it's possible to see shutter speeds of 1/1000th of a second outside and only get 1 second shutter speeds indoors.
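The shutter speed comparison can be expressed as a ratio, and photographers often convert such ratios into stops (each stop being a doubling of light). The sketch below assumes the aperture and ISO stay the same between the two shots, which the example above does not state explicitly.

```python
import math

def light_ratio(fast_shutter_s, slow_shutter_s):
    """How many times more light the faster exposure's scene provides,
    assuming the same aperture and ISO for both shots."""
    return slow_shutter_s / fast_shutter_s

def stops(ratio):
    """Convert a light ratio into photographic stops (doublings)."""
    return math.log2(ratio)

ratio = light_ratio(1 / 1000, 1.0)
print(round(ratio))            # 1000
print(round(stops(ratio), 1))  # 10.0
```

A 1000x difference in light is about 10 stops, which gives a sense of how much harder the sensor has to work indoors.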

The point is that we cannot make bold declarations that megapixels are a "big fat lie", especially when the test methodology and the testing are completely unscientific. We're fortunate to have technology that keeps getting better and cheaper, and it's not uncommon to find 10+ megapixel cameras under $800 these days and 8 megapixel cameras for less than $400. Don't base your buying decision on unscientific slogans; the smart advice is to read the in-depth reviews before you buy, because the more educated you are, the less likely you are to get ripped off.