These patterned glasses are all it takes to fool AI-powered facial recognition

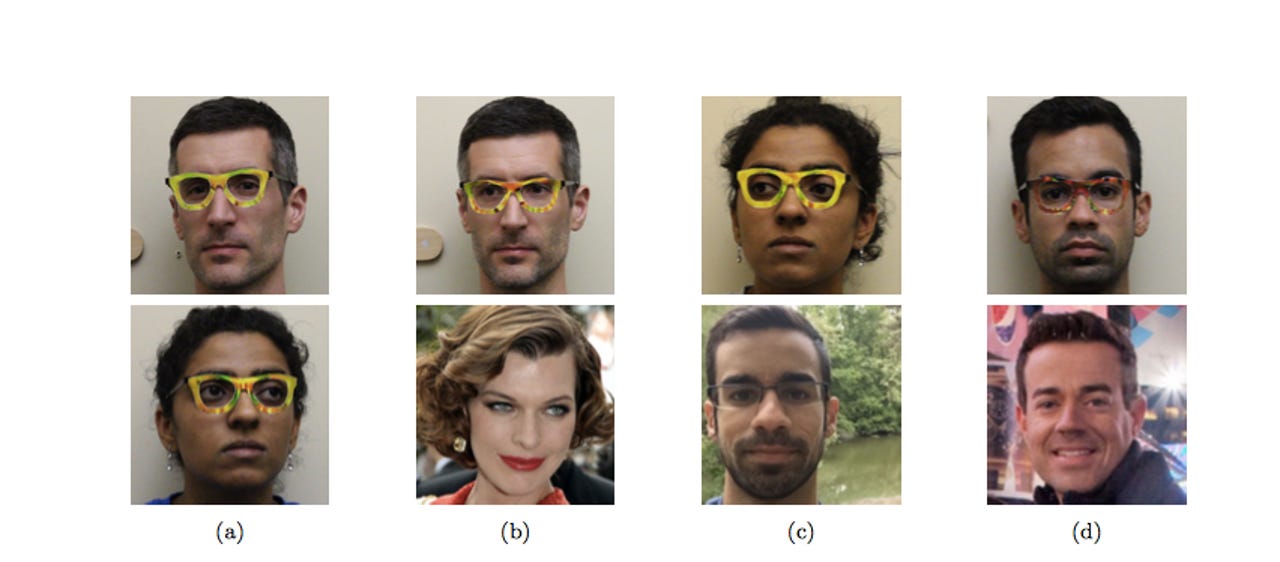

Researchers have shown how patterned eyeglass frames can be used to fool facial-recognition systems.

Researchers have developed patterned eyeglass frames that can trick facial-recognition algorithms into seeing someone else's face.

The printed frames allowed three researchers from Carnegie Mellon to dodge a machine-learning-based facial-recognition system 80 percent of the time.

Using certain variants of the frames, a white male was also able to fool the algorithm into mistaking him for movie actress Milla Jovovich, while a South-Asian female tricked it into seeing a Middle Eastern male.

The research may offer relief to anyone concerned about the increasing use of facial-recognition technology by law enforcement. A recent study found that facial-recognition databases used by US law enforcement contained images of 117 million US adults, or roughly half the US adult population.

However, the findings could also spell trouble for security and public safety systems that rely on facial recognition.

The patterned frames are less conspicuous than other ways of tricking facial-recognition systems, such as wearing a printed mask, which can in any case be detected because the wearer's face doesn't move. And while devices such as Privacy Visor frames are inconspicuous, they cost $240.

The Carnegie Mellon method costs just 22 cents per frame, making it a much more viable approach for a large-scale attack on a facial-recognition algorithm, with the potential to subvert surveillance and authentication systems that rely on the technology.

Mahmood Sharif, one of the researchers, told New Scientist that the frames could be refined with a normal tortoiseshell pattern, making them even less obvious to anti-spoofing technology.

The researchers argue their method of attacking facial recognition is important for two reasons.

"First, such attacks can be particularly pernicious, since they will be resistant to at least cursory investigation. Hence, they are a particularly important type of attack to investigate and learn how to defend against," they argue.

"Second, such attacks help the perpetrators, whether malicious or benign, achieve plausible deniability. A person seeking merely to protect her privacy against aggressive use of face recognition by merchants will plausibly be able to claim that any failure of an ML algorithm to recognize her is due to error or chance rather than deliberate subterfuge."

However, donning the frames probably won't allow anyone to evade facial-recognition systems in use today.

The research was conducted against machine-learning algorithms whose internals the researchers had full access to, so-called "white-box" facial-recognition systems. But the group also tested the impersonation attack against the cloud-based facial-recognition service Face++ and claims to have succeeded, despite its rate-limiting defenses.
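To illustrate what "white-box" access buys an attacker, here is a toy sketch. It is not the researchers' method. It assumes a hypothetical linear model that scores how closely a tiny 6-pixel "image" matches a target identity, plus a hypothetical mask marking which pixels fall on the eyeglass frame. Because the attacker can see the model's weights, it can read off which direction to push each frame pixel and change only those pixels until the model accepts the face as the target. The names `score` and `masked_attack`, and all numbers, are illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical toy setup: a 6-pixel "image", a linear model that scores how much
# the image looks like a target identity, and a mask marking the "frame" pixels.


def score(weights: List[float], bias: float, image: List[float]) -> float:
    """Linear target-identity score; a value above 0 counts as a match."""
    return sum(w * x for w, x in zip(weights, image)) + bias


def masked_attack(
    weights: List[float],
    bias: float,
    image: List[float],
    mask: List[bool],
    step: float = 0.1,
    max_iters: int = 100,
) -> Tuple[List[float], bool]:
    """Push the score above 0 by editing only the pixels where mask is True.

    For a linear model, the gradient of the score with respect to each pixel is
    simply that pixel's weight; white-box access is what exposes it.
    """
    adv = list(image)
    for _ in range(max_iters):
        if score(weights, bias, adv) > 0:
            break
        for i, (w, inside_frame) in enumerate(zip(weights, mask)):
            if inside_frame and w != 0:
                # Signed gradient step, clipped to the valid pixel range [0, 1].
                direction = 1.0 if w > 0 else -1.0
                adv[i] = min(1.0, max(0.0, adv[i] + step * direction))
    return adv, score(weights, bias, adv) > 0


weights = [0.5, -0.3, 0.8, 0.2, -0.6, 0.4]
bias = -1.0
face = [0.2, 0.5, 0.1, 0.3, 0.4, 0.2]
frame_mask = [False, True, True, False, True, False]  # pixels 1, 2 and 4 form the "frame"

adv_face, fooled = masked_attack(weights, bias, face, frame_mask)
print(f"before: {score(weights, bias, face):.2f}, "
      f"after: {score(weights, bias, adv_face):.2f}, fooled: {fooled}")
```

Pixels outside the frame are never touched, which mirrors why the glasses can look ordinary while still steering the classifier. A black-box attacker, such as one querying a cloud service like Face++, sees only the service's responses, so any estimate of that direction costs queries, which is presumably what rate limiting is meant to restrict.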