Facebook to ban political ads, limit content sharing on Messenger ahead of 2020 election

Facebook said Thursday that it's taking additional steps to eliminate misinformation on its platforms ahead of the November US election.

The social media giant said it will stop accepting new political ads in the week before the election, remove posts that claim people will contract coronavirus if they vote in-person, attach labels to content that tries to delegitimize the outcome of the election or claim election fraud, and block posts from candidates and campaigns that try to declare victory before official election results are available.

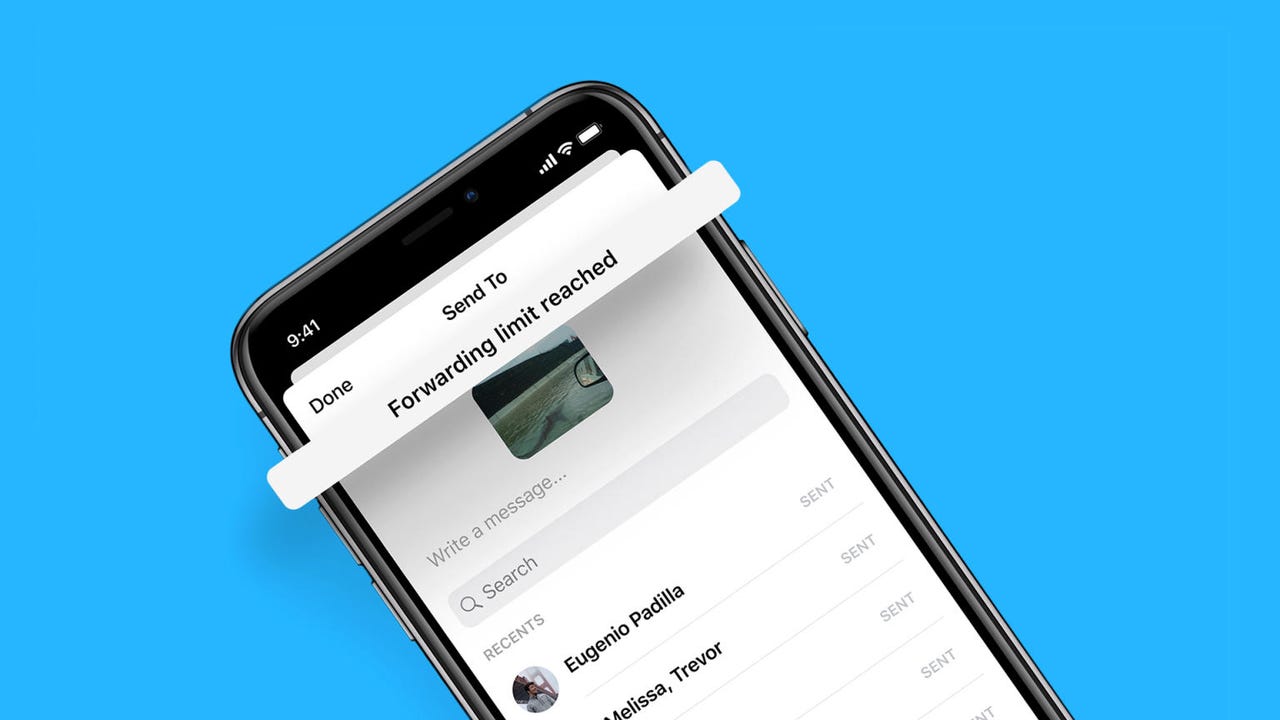

Facebook is also introducing a forwarding limit on Messenger. In the run-up to the election, messages can be forwarded to no more than five people or groups at a time, a restriction Facebook said is intended "to help curb the efforts of those looking to cause chaos, sow uncertainty or inadvertently undermine accurate information."

The announcements mark the latest step in what has become a years-long effort by Facebook to rebuild user trust damaged by the Cambridge Analytica scandal and to ease fears, dating back to the 2016 US presidential election, that the platform has become a tool for spreading Russian propaganda.

The focus on political ads is particularly noteworthy, given Facebook's previous resistance to blocking political ads or restricting ad targeting. Although the company imposed stricter rules on political advertisers earlier this year, Facebook said it would not block political ads or limit ad targeting because "people should be able to hear from those who wish to lead them." Facebook's approach to political ads stood in stark contrast to that of Twitter, which announced in late October 2019 that it would stop publishing political ads on its platform.

Facebook has also been less willing than Twitter to restrict controversial content from political leaders. Twitter famously attached fact-checking links to Donald Trump's tweets claiming that mail-in voting would result in a "rigged election," prompting Trump to accuse Twitter of "interfering" in the 2020 presidential election.

Twitter also took action on a Trump tweet that appeared to incite violence against protesters, noting that it breached its policy against glorifying violence. Facebook CEO Mark Zuckerberg declined to take similar action when the same content was posted on Facebook, prompting several of his employees to stage a "virtual walkout" in protest.

However, Facebook has been more aggressive in tackling misinformation related to the COVID-19 pandemic. Last month, the company removed a video posted to Donald Trump's Facebook page for violating its COVID-19 misinformation policies. The social network said the consequences for violating its standards vary depending on the severity of the violation and the person's history on the platform.