Google Soli: Palm-sized radar could rev up checkouts by telling apples from oranges

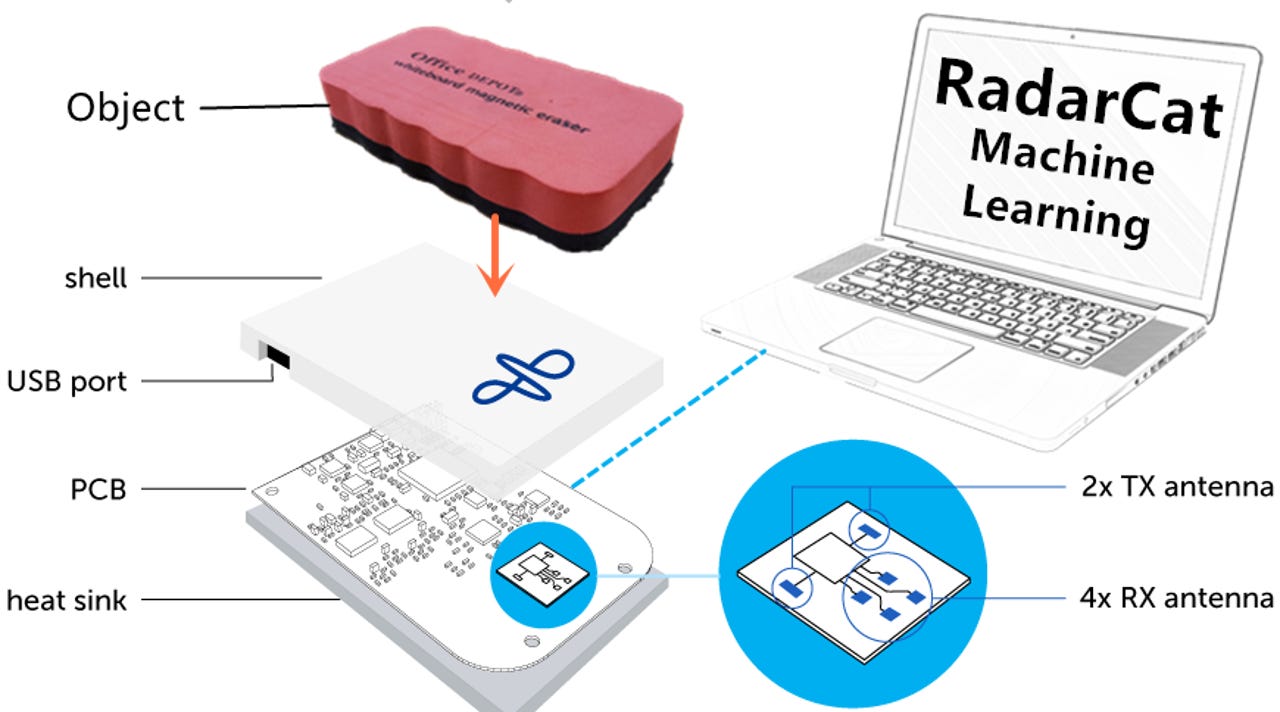

Employing machine learning, RadarCat uses Google's Soli radar sensors to recognize and distinguish between objects.

Researchers at the University of St Andrews in Scotland have used Soli, Google's miniature radar for mobile gesture control, to create RadarCat, a classification system that can accurately distinguish between objects in real time.

Rather than using cameras to capture objects in view, RadarCat combines recognition algorithms with radio waves beamed from Google's Soli radar, identifying objects by the unique traits in the signals reflected back to the sensor.

The mini radar was developed by Google's ATAP team and unveiled as Project Soli at Google I/O 2015. Soli sensors can recognize fine gestures, such as rubbing two fingers together, when they are performed in front of the radar.

However, the St Andrews researchers also found that placing different objects directly on the radar unit produced stable and unique reflected signals. With the aid of machine-learning algorithms and classification techniques, RadarCat is able to recognize various objects and distinguish between them.

Because it uses radar, the system can also see beneath non-shiny materials, which improves its chances of recognizing an object and lets it analyze the materials themselves.

"We leveraged improved knowledge and analysis of radar signal signatures, machine learning and classification results in the development of our final approach," the researchers write in a new paper describing the tech.

"The signatures, unique to each object, are measured when the object is proximate to the sensor, and allows us to classify the object using a machine-learning technique," they added.
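To make the idea of "classifying a signature" concrete, here is a minimal sketch in Python, assuming each radar reading has already been reduced to a short numeric feature vector. It uses a nearest-centroid classifier, a deliberately simple stand-in chosen for illustration; it is not the classifier the St Andrews team used. The feature values and labels are invented, not real Soli data, and the function names `train_centroids` and `classify` are hypothetical.

```python
import math

# Hypothetical feature vectors standing in for radar signatures.
# These numbers are invented for illustration; they are NOT real Soli readings.
TRAINING_DATA = [
    ([0.9, 0.1, 0.3], "apple"),
    ([0.8, 0.2, 0.35], "apple"),
    ([0.2, 0.7, 0.6], "orange"),
    ([0.25, 0.75, 0.55], "orange"),
    ([0.5, 0.5, 0.95], "glass of water"),
    ([0.55, 0.45, 0.9], "glass of water"),
]


def train_centroids(samples):
    """Average the signature vectors of each label into one centroid per class."""
    sums = {}
    counts = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def classify(signature, centroids):
    """Return the label whose centroid is closest by Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(signature, centroids[label]))


centroids = train_centroids(TRAINING_DATA)
print(classify([0.85, 0.15, 0.3], centroids))  # prints "apple"
```

A real system would extract features from the raw radar signal and rely on a stronger learned model, but the general pattern of learning what each known object's signature looks like and then matching new readings against those signatures is what the researchers' quote describes.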

A video demonstration shows RadarCat immediately recognizing different objects, including books, phones, fruit, glasses of water, and more.

The RadarCat and Soli kit could be useful for a range of purposes, from helping vision-impaired people navigate their surroundings to speeding up retail self-checkouts by identifying goods, sorting recycling, or integrating with other industrial processes.

As the researchers note, RadarCat can examine materials and objects without damaging them or requiring tags such as RFID. Additionally, since Soli is very compact, RadarCat could become a highly portable recognition system.

It's not yet clear how expensive Soli chips will be, but that may be revealed in the middle of next year, when Infineon and Google plan to bring Soli to market.

Given Google's recent moves on its Home smart speaker, it wouldn't be surprising to see Soli's gesture capabilities exploited in a future model.
