Why you don't need a SAN any more

Since Windows Server 2012 introduced Storage Spaces, software-defined storage (SDS) has been moving up the stack. It has gone from an acceptable alternative to the kind of RAID systems you find in NAS boxes to competing with full-featured SANs, using the Scale-Out File Server (SoFS) role on commodity servers and storage. The StorSimple appliances and Azure Storage services bridge the gap between iSCSI storage and the cloud. And in Windows Server vNext you get more SAN features, such as storage quality-of-service and storage replication. At this point, you need a good reason to pick a proprietary SAN, even for high-end storage.

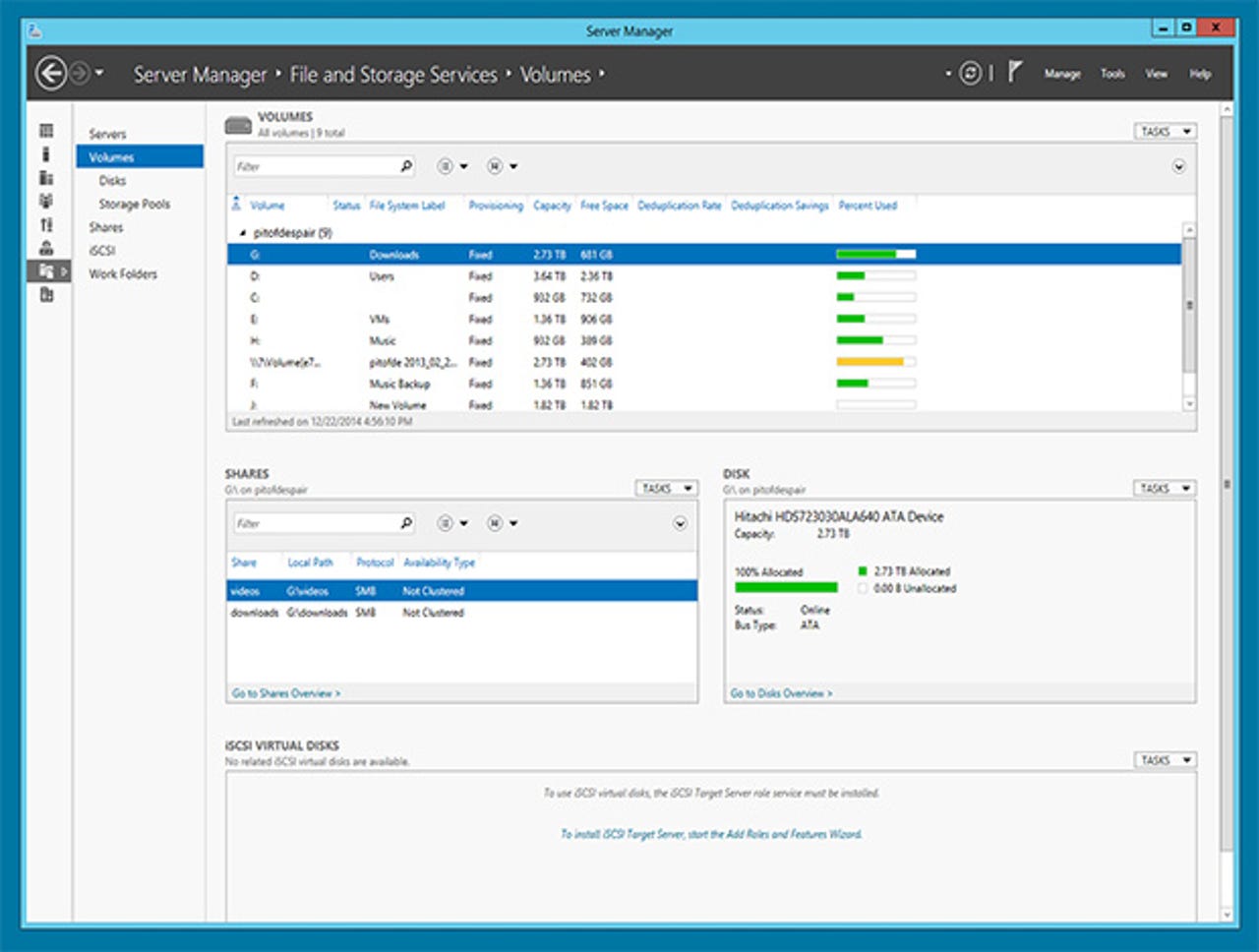

Pooling your disks: Storage Spaces and tiers

The heart of Windows Server's storage tools is Storage Spaces, its built-in storage virtualisation technology. Storage virtualisation has long been the province of high-end storage arrays, like those from HP and EMC, but Windows Server brings its key features to the average departmental server, not just to the data centre.

It doesn't matter whether you're using directly attached or network storage. As long as you're using a block-level protocol and have direct access to your disks, you can quickly build storage pools and carve them into virtualised storage, either fixed-size or thin-provisioned. You can create clustered pools, with failover, as well as standalone pools. Clustered pools are limited to 80 physical disks because they need to handle failover between nodes. A standard pool can hold up to 240 disks (four 60-disk JBODs).
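The difference between fixed and thin provisioning is easiest to see in a toy model. In this model, a fixed virtual disk reserves all of its space from the pool up front. A thin one draws space only as data is written, so it can be bigger than the pool's free space. The class and method names are hypothetical, and the model ignores the capacity that mirror or parity resilience would consume. The disk-count limits are the figures quoted above.

```python
MAX_DISKS_STANDARD = 240   # figure quoted in the article: four 60-disk JBODs
MAX_DISKS_CLUSTERED = 80   # figure quoted in the article for clustered pools


class StoragePool:
    """Hypothetical toy model: physical disks pooled, virtual disks carved out."""

    def __init__(self, disk_sizes_gb, clustered=False):
        limit = MAX_DISKS_CLUSTERED if clustered else MAX_DISKS_STANDARD
        if len(disk_sizes_gb) > limit:
            raise ValueError(f"pool is limited to {limit} physical disks")
        self.capacity_gb = sum(disk_sizes_gb)
        self.reserved_gb = 0   # physical space already taken from the pool
        self.vdisks = {}

    def free_gb(self):
        return self.capacity_gb - self.reserved_gb

    def create_vdisk(self, name, size_gb, thin=False):
        if not thin:
            # Fixed provisioning reserves the full size up front.
            if size_gb > self.free_gb():
                raise ValueError("not enough free pool space for a fixed disk")
            self.reserved_gb += size_gb
        # A thin disk reserves nothing yet, so it may be larger than the free space.
        self.vdisks[name] = {"size_gb": size_gb, "thin": thin, "written_gb": 0}

    def write(self, name, gb):
        vd = self.vdisks[name]
        if vd["written_gb"] + gb > vd["size_gb"]:
            raise ValueError("write exceeds the virtual disk's size")
        if vd["thin"]:
            # Thin disks draw physical space from the pool only as data arrives.
            if gb > self.free_gb():
                raise ValueError("pool exhausted")
            self.reserved_gb += gb
        vd["written_gb"] += gb


pool = StoragePool([4000] * 4)               # four 4TB disks, 16,000GB raw
pool.create_vdisk("fixed", 6000)             # reserves 6,000GB immediately
pool.create_vdisk("thin", 20000, thin=True)  # bigger than the pool, reserves nothing
pool.write("thin", 500)
print(pool.free_gb())                        # 9500
```

Thin provisioning lets you promise more than you own. That is why the model only raises "pool exhausted" when real data arrives, not when the disk is created.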

Once pools are in place, they can be configured as Storage Spaces. As a high-level storage virtualisation service, Storage Spaces can configure sections of a disk pool as simple, mirror or parity spaces. Simple spaces are much like traditional disks, with no resilience, and are best used for temporary data -- think of them as scratch working storage. Mirror and parity spaces add resilience (especially when used with Microsoft's new ReFS file system). Mirrors are a powerful tool. There's support for up to three-way mirroring, and enclosure awareness associates data copies with specific JBODs. With three-way mirroring across three or four JBODs, you can lose an entire enclosure and still keep running.
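Enclosure awareness is what makes losing a whole JBOD survivable. The sketch below compares two placements. A naive placement puts the three copies of a data slab on consecutive disks, which can all sit in the same JBOD. An enclosure-aware placement forces the three copies into three different enclosures. The round-robin policy and function names are hypothetical illustrations, not how Storage Spaces allocates slabs internally.

```python
def naive_placement(slabs, disks):
    """Hypothetical: three copies on consecutive disks, ignoring which JBOD each is in."""
    return {s: [disks[(3 * i + k) % len(disks)] for k in range(3)]
            for i, s in enumerate(slabs)}


def enclosure_aware_placement(slabs, disks):
    """Hypothetical: the three copies of each slab land in three different enclosures."""
    by_enclosure = {}
    for enclosure, disk in disks:
        by_enclosure.setdefault(enclosure, []).append((enclosure, disk))
    enclosures = sorted(by_enclosure)
    if len(enclosures) < 3:
        raise ValueError("enclosure-aware three-way mirroring needs at least three JBODs")
    placement = {}
    for i, s in enumerate(slabs):
        copies = []
        for k in range(3):
            members = by_enclosure[enclosures[(i + k) % len(enclosures)]]
            copies.append(members[i % len(members)])  # spread load across disks
        placement[s] = copies
    return placement


def survives_enclosure_loss(placement, failed):
    """True if every slab still has a copy outside the failed enclosure."""
    return all(any(enc != failed for enc, _ in copies) for copies in placement.values())


disks = [(f"jbod{e}", d) for e in range(3) for d in range(4)]  # 3 JBODs x 4 disks
slabs = range(6)
print(survives_enclosure_loss(naive_placement(slabs, disks), "jbod0"))            # False
print(survives_enclosure_loss(enclosure_aware_placement(slabs, disks), "jbod0"))  # True
```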

Storage Spaces can also improve performance by striping data across multiple disks. If you're mirroring data, this can improve performance considerably, because data can be read in parallel not only from multiple disks but also from multiple JBODs. To keep your system easy to maintain, you should standardise on one type of disk in a JBOD (and ideally on one specific firmware release).
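Striping works by chopping the logical disk into fixed-size chunks and dealing them out round-robin across the disks. Storage Spaces calls those disks "columns". As a result, one large read touches several disks that can each work on their part at once. The sketch below assumes a 256KB stripe unit. The helper names are hypothetical.

```python
INTERLEAVE = 256 * 1024  # assumed stripe unit per column, in bytes


def locate(offset, columns, interleave=INTERLEAVE):
    """Map a logical byte offset to (column, offset within that column)."""
    unit = offset // interleave      # which interleave-sized chunk this byte is in
    column = unit % columns          # chunks rotate round-robin across columns
    row = unit // columns            # complete stripes before this chunk
    return column, row * interleave + offset % interleave


def columns_touched(offset, length, columns, interleave=INTERLEAVE):
    """Columns a read of `length` bytes hits; each can be read in parallel."""
    first = offset // interleave
    last = (offset + length - 1) // interleave
    return sorted({unit % columns for unit in range(first, last + 1)})


print(locate(INTERLEAVE + 10, columns=4))           # (1, 10)
print(columns_touched(0, 1024 * 1024, columns=4))   # [0, 1, 2, 3]
```

A 1MB read over four columns becomes four 256KB reads that run side by side. With a mirror, each of those can also be served from whichever copy is least busy.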

Microsoft uses Storage Spaces for one of its most demanding storage scenarios: the Windows release server. There, it was able to significantly increase capacity and storage throughput at less than half the cost of the SAN it previously used.

When you need more performance from your storage, Windows Server 2012 R2's built-in storage tiering is a powerful tool. It builds on Storage Spaces to separate SSD from HDD storage. Once it's set up, data is automatically tiered between SSD and HDD, with your most frequently used data stored on SSD for faster access. As data ages, it's automatically moved to HDD. There's no user intervention needed once you've set up your storage pool and defined the disks used for each tier. You just need to make sure the tiered space uses fixed rather than thin provisioning.
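The tiering idea can be sketched as a periodic re-ranking. Count how often each chunk of data (a "slab") was accessed in the last period, then put the hottest ones on SSD and the rest on HDD. Data that goes cold simply loses its place at the next re-ranking. This is a simplified model, assuming a fixed number of SSD slots and a plain access count as the measure of heat. Windows' own optimiser runs on its own schedule with its own heuristics, which this doesn't reproduce.

```python
from collections import Counter


def retier(slabs, access_log, ssd_slots):
    """Hypothetical: hottest slabs (by access count this period) go to SSD, rest to HDD."""
    heat = Counter(access_log)                           # never-accessed slabs count as 0
    ranked = sorted(slabs, key=lambda s: (-heat[s], s))  # hottest first, ties by name
    return set(ranked[:ssd_slots]), set(ranked[ssd_slots:])


slabs = ["a", "b", "c", "d"]
ssd, hdd = retier(slabs, ["a", "a", "b", "c", "a", "b"], ssd_slots=2)
print(sorted(ssd))   # ['a', 'b']
# Next period "a" and "b" go cold, so they age out to HDD.
ssd, hdd = retier(slabs, ["d", "d", "c"], ssd_slots=2)
print(sorted(ssd))   # ['c', 'd']
```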

Scaling out to a storage fabric

An important element of Microsoft's storage story is its SMB protocol, the heart of Windows file sharing. A new version, SMB 3.0, arrived with Windows Server 2012, adding support for many key software-defined storage requirements. These include transparent failover between clustered storage nodes and SMB Direct, which gives network adapters direct memory access for faster data transfer. There's also now support for end-to-end encryption, making it harder for your data to be intercepted in transit. To get the most from the latest version, SMB 3.02, you'll need to make sure you're using network cards that support RDMA (remote direct memory access).

That's an important point: getting the most from any of Windows' new storage features may mean investing in new hardware. That puts up the price. But it's commodity hardware that's still cheaper than buying a third-party system, and it won't require additional storage management software.

Bring all the new Windows Server storage features together and you've got the Scale-Out File Server. Using Windows clustering, SoFS lets you share the same folder from all the nodes of a cluster, with changes made through any one node visible on all the others. Because client requests can be routed to any node, you get faster access to data on busy servers. You can also run disk checks and other resource-intensive tasks on one node at a time while the others carry on delivering data.
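The scale-out idea is that every node serves the same share from the same underlying cluster storage. Requests can then go to whichever node is free, and one node can be taken out for maintenance without clients losing access. The toy model below uses round-robin routing and a "maintenance" set. The class, its routing policy and the node names are hypothetical. Real SoFS shares sit on Cluster Shared Volumes, and SMB handles client connections.

```python
import itertools


class ScaleOutShare:
    """Hypothetical toy model: every node serves one share backed by the same storage."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.maintenance = set()   # nodes temporarily not serving clients
        self.volume = {}           # shared storage every node can see
        self._next = itertools.cycle(self.nodes)

    def _pick_node(self):
        # Round-robin, skipping any node that is in maintenance.
        for _ in range(len(self.nodes)):
            node = next(self._next)
            if node not in self.maintenance:
                return node
        raise RuntimeError("no node available")

    def write(self, path, data):
        node = self._pick_node()
        self.volume[path] = data
        return node

    def read(self, path):
        return self._pick_node(), self.volume[path]


share = ScaleOutShare(["node1", "node2", "node3"])
share.maintenance.add("node2")           # e.g. running disk checks on node2
served_by = [share.write(f"vm{i}.vhdx", i) for i in range(4)]
print(served_by)                         # ['node1', 'node3', 'node1', 'node3']
print(share.read("vm0.vhdx"))            # ('node1', 0)
```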

Scale-Out File Server is the logical endpoint of Windows Server 2012 R2's software-defined storage. It's fast, flexible, and cheaper than the SAN alternatives. It might not be for every network, but it's also something you can build up to, as you start to use Storage Spaces and then add clustering to your network. The end result is a storage fabric, much like that used by Azure -- and ready for your own private cloud. While it's not suitable for all workloads in this version (especially not SharePoint and other document-centric services), Scale-Out File Server is ideal for hosting virtual machine images and virtual hard disks, for handling databases and for hosting web content.

To the cloud

Microsoft's StorSimple appliances take a different approach to storage networking, offering massive amounts of storage while taking up very little physical space. That's because they're actually a mix of hardware and software, automatically tiering your least-used data onto Azure.

The latest StorSimple 8000 series hardware uses iSCSI to connect to your existing servers, with a mix of SSD and HDD storage. As data moves down the tiers, it's automatically deduplicated, and the lowest-priority data is transferred to cloud storage, with up to 500TB stored in Azure blob storage. Local storage is what you'd expect from an iSCSI storage appliance, with 40TB or so of available disk space (more if your data can be deduplicated and compressed). But it behaves like an array that gets bigger without you having to buy more drives.
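Deduplication plus cloud tiering can be sketched in a few lines. Chunks are keyed by a hash of their content, so identical chunks are stored once. The least recently used chunks are then moved to a "cloud" store, and the data can still be reassembled from whichever tier holds each chunk. StorSimple's actual chunking and tiering policies aren't described here. The fixed 4KB chunks, SHA-256 keys and least-recently-used offload below are all assumptions for illustration.

```python
import hashlib

CHUNK = 4096  # hypothetical fixed chunk size; real appliances may chunk differently


def dedup(data, store):
    """Store each unique chunk once (keyed by SHA-256); return the list of chunk keys."""
    recipe = []
    for i in range(0, len(data), CHUNK):
        chunk = data[i:i + CHUNK]
        key = hashlib.sha256(chunk).hexdigest()
        store.setdefault(key, chunk)       # a duplicate chunk costs no extra space
        recipe.append(key)
    return recipe


def offload_coldest(local, cloud, last_access, keep):
    """Move all but the `keep` most recently used chunks from local storage to the cloud."""
    by_recency = sorted(local, key=lambda k: last_access.get(k, 0), reverse=True)
    for key in by_recency[keep:]:
        cloud[key] = local.pop(key)


def rebuild(recipe, *tiers):
    """Reassemble the data, fetching each chunk from whichever tier holds it."""
    return b"".join(next(t[k] for t in tiers if k in t) for k in recipe)


data = b"A" * 8192 + b"B" * 4096 + b"A" * 4096   # four chunks, two unique
local, cloud = {}, {}
recipe = dedup(data, local)
print(len(recipe), len(local))                   # 4 2
offload_coldest(local, cloud, {recipe[0]: 10, recipe[2]: 5}, keep=1)
print(len(local), len(cloud))                    # 1 1
print(rebuild(recipe, local, cloud) == data)     # True
```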

The real advantage of the latest StorSimple appliance is that it acts as a bridge between your on-premises storage and the cloud. An Azure-hosted StorSimple virtual appliance comes with your subscription, and is managed from the same Azure portal as your on-premises arrays. Data uploaded to Azure and managed with the StorSimple virtual appliance can be accessed by Azure IaaS VMs, for disaster recovery or as part of a cloud migration. You get storage that behaves like a SAN and a cloud service at the same time.

Taking software-defined storage further

When the next version of Windows Server comes out (probably in fall 2015), it will include even more software-defined storage tools (as well as using the next version of SMB, probably 3.11).

Even the SoFS role is currently limited in how far it can scale out. A new feature, Storage Replica, provides the kind of synchronous replication you'd use to keep two storage arrays continuously in sync with a guarantee that you don't lose data, but with more flexibility. With SAN replication, you'd usually need software running on your cluster to handle automatic failover when something goes wrong. You also always need to think about the distances involved: if the sites are too far apart, the latency and delayed writes will slow your applications down too much to be practical. Plus, you tend to need near-identical SAN setups for synchronous replication.
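The distance problem is simple arithmetic. A synchronous write isn't acknowledged until it has made a round trip to the remote site, and light in optical fibre covers roughly 200km per millisecond. The sketch below gives a physics-only lower bound. It ignores switching, storage and protocol overhead, which the hypothetical `overhead_ms` parameter stands in for. The writes-per-second figure assumes an application that waits for each write before issuing the next.

```python
FIBRE_KM_PER_MS = 200.0  # light in optical fibre travels roughly 200km per millisecond


def sync_write_penalty_ms(distance_km, overhead_ms=0.0):
    """Lower bound on the extra latency of a synchronous write: one round trip."""
    return 2 * distance_km / FIBRE_KM_PER_MS + overhead_ms


def max_serial_writes_per_s(distance_km, overhead_ms=0.0):
    """Ceiling for an application that waits for each write before issuing the next."""
    return 1000.0 / sync_write_penalty_ms(distance_km, overhead_ms)


for km in (50, 100, 1000):
    print(km, sync_write_penalty_ms(km), max_serial_writes_per_s(km))
# 50 0.5 2000.0
# 100 1.0 1000.0
# 1000 10.0 100.0
```

Even before real-world overheads, a 1,000km link caps a strictly serial writer at about 100 writes a second. That is why synchronous replication is usually kept to metro distances.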

Storage Replica works between almost any kind of storage -- including a laptop hard drive or a JBOD, server storage or your existing SAN -- using SMB 3.1 as the transport. It's volume-based and works at the block rather than the file level (so it doesn't matter if files are in use).
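Working at the block level means the replica only ever sees "write these bytes to block N". It never needs to know which file, if any, is open. The toy model below shows that, along with the key difference between synchronous and asynchronous modes. A synchronous write isn't acknowledged until the destination has it. An asynchronous write is acknowledged immediately and shipped later, so acknowledged writes can be lost if the source fails first. The class is hypothetical and leaves out the write logs that Storage Replica keeps (the ones the article suggests putting on SSD).

```python
class ReplicatedVolume:
    """Hypothetical toy block-level replica: writes are (block number, bytes)."""

    def __init__(self, synchronous=True):
        self.synchronous = synchronous
        self.source, self.destination = {}, {}
        self.pending = []        # async writes acknowledged but not yet shipped

    def write(self, block, data):
        self.source[block] = data
        if self.synchronous:
            self.destination[block] = data      # ack only once the replica has it
        else:
            self.pending.append((block, data))  # ack now, ship later
        return "ack"

    def ship(self):
        """Send queued asynchronous writes to the destination, in order."""
        for block, data in self.pending:
            self.destination[block] = data
        self.pending.clear()

    def writes_at_risk(self):
        """Acknowledged writes that would be lost if the source died right now."""
        return len(self.pending)


for mode in (True, False):
    vol = ReplicatedVolume(synchronous=mode)
    vol.write(0, b"boot")
    vol.write(7, b"data")
    print("sync" if mode else "async", vol.writes_at_risk())
# sync 0
# async 2
```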

You can use it for synchronous replication to keep volumes always in sync. But if you need to stretch over a longer distance and can cope with crash-consistent volumes and possible data loss, you can use the same system for asynchronous replication. That means you can stretch a cluster across two sites to get both high availability and disaster recovery. Storage Replica even replicates volume snapshots. And it doesn't need complex infrastructure -- just Active Directory and at least 1Gbps networking between your servers, ideally with SSDs to store the logs. You can also use the new Cloud Witness feature to have Azure provide the witness vote for the cluster quorum that a stretch cluster needs, instead of adding extra hardware.
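The reason a stretch cluster needs a witness comes down to majority voting. Suppose a cluster has two nodes at each of two sites. If one site fails, the survivors hold exactly half the votes, which isn't a majority, so the cluster stops. A third-party witness vote, such as one provided by Azure, breaks the tie. This is simplified majority arithmetic. It ignores Windows clustering refinements such as dynamic quorum, and the site names are hypothetical.

```python
def has_quorum(votes_online, total_votes):
    """Majority rule: the cluster keeps running only with more than half the votes."""
    return votes_online > total_votes // 2


def votes_after_site_loss(site_nodes, failed_site, witness):
    """Votes left after a whole site fails (one vote per node, plus the witness)."""
    surviving = sum(n for site, n in site_nodes.items() if site != failed_site)
    return surviving + (1 if witness else 0)


sites = {"london": 2, "dublin": 2}   # hypothetical two-site stretch cluster
total_nodes = sum(sites.values())
print(has_quorum(votes_after_site_loss(sites, "dublin", witness=False), total_nodes))     # False
print(has_quorum(votes_after_site_loss(sites, "dublin", witness=True), total_nodes + 1))  # True
```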

Microsoft is also planning to add support for a Scale-Out File Server role that works without shared storage and lets you use directly attached SATA drives to save money. That would be ideal for storing virtual machines and backups, or as a replica target; again, it will use SMB 3 for the storage network fabric. There is also storage resiliency, which handles transient storage disruptions by saving the state of a virtual machine and pausing it until the storage is working normally again. With it, you'll be able to run virtualised workloads on ever-cheaper storage hardware.
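Storage resiliency is essentially a small state machine. When storage briefly disappears, the VM is frozen with its state preserved instead of having the guest see I/O errors. When storage comes back, it resumes where it left off. The sketch below is hypothetical: the class, state names and callbacks are illustrations, not Hyper-V's API. It omits details such as how long a VM may stay paused.

```python
from enum import Enum


class VMState(Enum):
    RUNNING = "running"
    PAUSED_FOR_STORAGE = "paused until storage recovers"   # hypothetical state name


class ResilientVM:
    """Hypothetical toy model: freeze on a transient storage failure instead of failing I/O."""

    def __init__(self):
        self.state = VMState.RUNNING
        self.saved_state = None

    def on_storage_failure(self, current_state):
        if self.state is VMState.RUNNING:
            self.saved_state = current_state      # preserve the VM's state
            self.state = VMState.PAUSED_FOR_STORAGE

    def on_storage_restored(self):
        """Resume where the VM left off; returns the state it resumes with."""
        if self.state is not VMState.PAUSED_FOR_STORAGE:
            return None
        self.state = VMState.RUNNING
        resumed, self.saved_state = self.saved_state, None
        return resumed


vm = ResilientVM()
vm.on_storage_failure({"open_transactions": 3})
print(vm.state.name)              # PAUSED_FOR_STORAGE
print(vm.on_storage_restored())   # {'open_transactions': 3}
```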

Windows Server 2012 R2 added Storage Quality of Service (QoS) to Hyper-V, but in the next version it becomes much more effective. The new algorithm draws on both Microsoft's experience managing storage in Azure and work by Microsoft Research (MSR). Typically, QoS is based on network rate limits and workload classification. That approach struggles to help you meet storage SLAs when you have the 'noisy neighbour' problem, with one workload hogging shared storage. Microsoft is taking a new approach that assigns costs to storage operations based on the hardware, the workload, bandwidth and latency. It then uses a centralised controller to dynamically partition storage traffic, doing a better job of making sure each virtual machine gets a fair share of storage bandwidth.
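A cost-based fair share can be illustrated in two steps. First, convert each workload's I/O into a common cost unit, so that a big I/O counts for more than a small one. Second, split the available capacity max-min fairly: workloads asking for less than an equal share get everything they ask for, and the rest is divided evenly among the heavier users. This isn't Microsoft's algorithm. It uses textbook max-min fairness with a made-up cost of one unit per 8KB, and the workload names and numbers are illustrative.

```python
def io_cost(size_bytes, unit=8192):
    """Hypothetical cost model: one unit per 8KB of I/O, rounded up, minimum 1."""
    return max(1, -(-size_bytes // unit))


def fair_share(demands, capacity):
    """Max-min fair split of `capacity` cost units between flows.

    Flows asking for less than an equal share get all they ask for; whatever
    they leave is split evenly among the flows that want more.
    """
    alloc, remaining = {}, float(capacity)
    pending = sorted(demands, key=demands.get)           # smallest demand first
    for i, flow in enumerate(pending):
        alloc[flow] = min(demands[flow], remaining / (len(pending) - i))
        remaining -= alloc[flow]
    return alloc


# Demand in cost units = IOPS x cost per I/O (illustrative numbers).
demands = {
    "web": 500 * io_cost(4096),        # 500 small I/Os per second
    "db": 375 * io_cost(65536),        # 375 x 64KB I/Os = 3,000 units
    "noisy": 10000 * io_cost(8192),    # a neighbour trying to hog the array
}
print(fair_share(demands, capacity=6000))
# {'web': 500, 'db': 2750.0, 'noisy': 2750.0}
```

The noisy neighbour asks for far more than anyone else, but it can't push the light "web" workload below its modest demand. It also only ties with "db" for what's left.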

It works on Hyper-V and will be turned on by default for Scale-Out File Server workloads. You can set QoS policy for both IOPS and latency that applies to an individual VHD, to a VM, to a service or to a whole workload. That means you don't have to work out how to make demanding applications play well together; you can just set the maximum or minimum IOPS you need from your storage for a particular workload and Windows Server will automatically prioritise and throttle virtual machines as necessary to make sure you get it.
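Per-workload minimums and maximums can be layered on top of the fair-share idea:

1. Throttle each workload to its maximum.
2. Reserve its minimum.
3. Hand out what's left fairly.

Workloads with no policy still take part, which matches the point that an unmanaged VM is still kept in check. The dictionary policy format and the function are hypothetical. They model IOPS only (not latency targets) and are not the Windows Server interface, which the article says is driven from PowerShell in the Technical Preview.

```python
import math


def apply_policies(demands, capacity, policies):
    """Hypothetical: allocate IOPS under per-flow {'min': ..., 'max': ...} policies.

    1. Throttle every flow to its maximum (if it has one).
    2. Reserve each flow's minimum (or its demand, if that's smaller).
    3. Share the remaining capacity max-min fairly, smallest need first.
    Flows without a policy still take part, so an unmanaged VM can't starve others.
    """
    want, alloc = {}, {}
    for flow, demand in demands.items():
        policy = policies.get(flow, {})
        want[flow] = min(demand, policy.get("max", math.inf))
        alloc[flow] = min(policy.get("min", 0), want[flow])
    leftover = capacity - sum(alloc.values())
    if leftover < 0:
        raise ValueError("minimums add up to more than the storage can deliver")
    needy = sorted((f for f in demands if want[f] > alloc[f]), key=lambda f: want[f] - alloc[f])
    for i, flow in enumerate(needy):
        extra = min(want[flow] - alloc[flow], leftover / (len(needy) - i))
        alloc[flow] += extra
        leftover -= extra
    return alloc


policies = {"sql": {"min": 5000}, "web": {"max": 1000}}  # "noisy" has no policy
print(apply_policies({"sql": 9000, "web": 2000, "noisy": 20000}, 10000, policies))
# {'sql': 7000.0, 'web': 1000, 'noisy': 2000.0}
```

The protected "sql" workload gets its guaranteed 5,000 IOPS plus a fair slice of the spare capacity. "web" is capped at its maximum, and the unmanaged "noisy" VM gets what remains.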

It doesn't matter if the problem VM is one you haven't set a policy for. Windows Server will still manage it, making sure the workloads you're protecting with policy get the storage they need. In the Technical Preview, you use PowerShell for storage QoS; at launch, System Center will also have tools for this. That means you can virtualise, distribute and protect your storage from the same system you use to manage your virtual machines and workloads. Again, that's another advantage over a proprietary SAN, where you'll probably end up using dedicated storage management software alongside your other tools.

Windows Server 2012 R2 is already a very capable replacement for many SAN scenarios, as well as making virtualised storage simple and cheap enough for small businesses. With the next version, it gets more sophisticated, for both on-premises and hybrid cloud storage options -- and you can still do it all on commodity hardware.