Cambridge robot reads emotions

Mind-reading robots

The Rainbow Research Group at the University of Cambridge, which looks at how interactions between people and computers can be improved, has been conducting a series of experiments with robots developed to detect, analyse and respond to human emotions.

In a University of Cambridge video released in December, the Rainbow team described its work on developing 'mind-reading machines', or computers that infer people's emotional states by monitoring facial expressions and body language.

"The problem is that computers don't react to how I feel," Professor Peter Robinson of the University of Cambridge says in the video. "Whether I'm pleased or annoyed, they just ignore me."

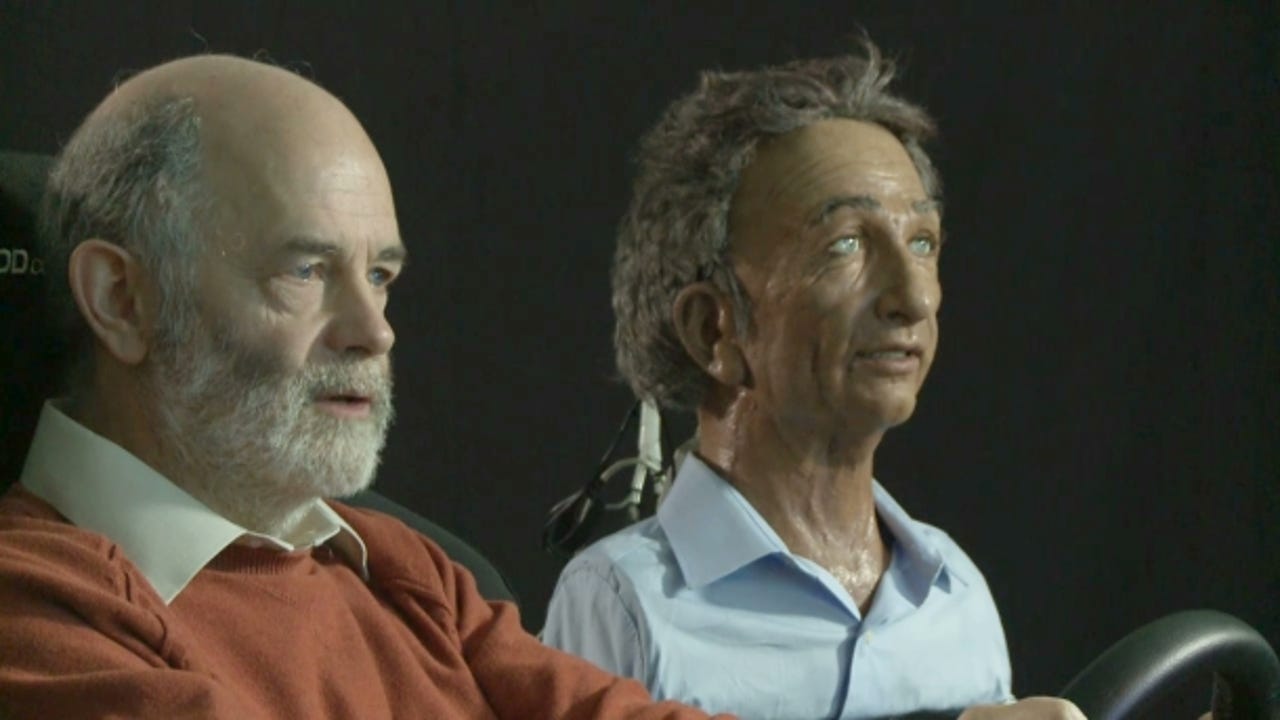

In this picture, Robinson (left) demonstrates interaction with Charles, a direction-giving robot, in a driving simulation.

In the test, Robinson pretends to be vaguely disquieted about a simulated build-up of traffic and suggests an alternative route. Charles, the navigator, agrees to the route change and expresses mild surprise at Robinson's positive reaction.

Robot's camera eyes

Charles has cameras in his eyes to monitor expressions on human faces, and 24 motors controlling facial expressions.

"The way Charles and I can communicate shows us the future of how people are going to interact with machines," Robinson said.

Separate systems in Charles monitor expressions, gestures and body movements.

Robot analyses voices

The cameras in Charles's eyes track feature points on faces. A computer then calculates facial expressions and head gestures such as nodding, and interprets the movements as combinations of 400 predefined mental states.

A separate system analyses tone of voice — the tempo, pitch and volume — and interprets these using the same list of mental states.

The system also monitors human body movements — for example, an expansive gesture could be interpreted as indicating anger.
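As a rough illustration of how readings from the face, voice and body channels might be combined, the sketch below averages one probability estimate per channel over a shared list of mental states. This is not the Rainbow team's method: the three state labels, the `fuse` function, the equal weights and the example numbers are all assumptions made for illustration.

```python
from typing import Dict, List

# Hypothetical labels. The article mentions a predefined list of mental
# states but does not enumerate them.
STATES = ["interested", "confused", "annoyed"]


def fuse(estimates: List[Dict[str, float]], weights: List[float]) -> Dict[str, float]:
    """Combine per-channel estimates by weighted averaging, then renormalise."""
    if len(estimates) != len(weights):
        raise ValueError("need exactly one weight per channel")
    fused = {state: 0.0 for state in STATES}
    for estimate, weight in zip(estimates, weights):
        for state in STATES:
            # A state missing from a channel's estimate counts as zero.
            fused[state] += weight * estimate.get(state, 0.0)
    total = sum(fused.values())
    if total <= 0:
        raise ValueError("estimates and weights must carry positive mass")
    return {state: value / total for state, value in fused.items()}


# Invented example readings, one per channel described in the article.
face = {"interested": 0.6, "confused": 0.3, "annoyed": 0.1}
voice = {"interested": 0.2, "confused": 0.3, "annoyed": 0.5}
body = {"interested": 0.2, "confused": 0.2, "annoyed": 0.6}

result = fuse([face, voice, body], [1.0, 1.0, 1.0])
best = max(result, key=result.get)
print(best, round(result[best], 2))
```

In this invented case the face alone suggests interest, but the voice and body readings outweigh it, so the combined estimate leans towards annoyance. Unequal weights would let a more trusted channel count for more.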

According to Robinson, the system interprets human emotions correctly more than 70 percent of the time, approximately the same rate as most humans.

Robot 'emoting' computer system

Robinson's 'emoting' computer system draws on multiple disciplines: computer science, theory of mind, studies of autism and probability theory have all fed into the research. People on the autism spectrum often have difficulties in interpreting emotions in other humans.

In this picture, Robinson's movements are mapped onto those of an avatar.

Robot Elvis

Robinson's robots have gone through various prototypes and test states. Robinson started with Virgil the chimp, which people responded to. The next prototype was Elvis, whose eyes can be seen here.