Google I/O: From 'AI first' to AI working for everyone

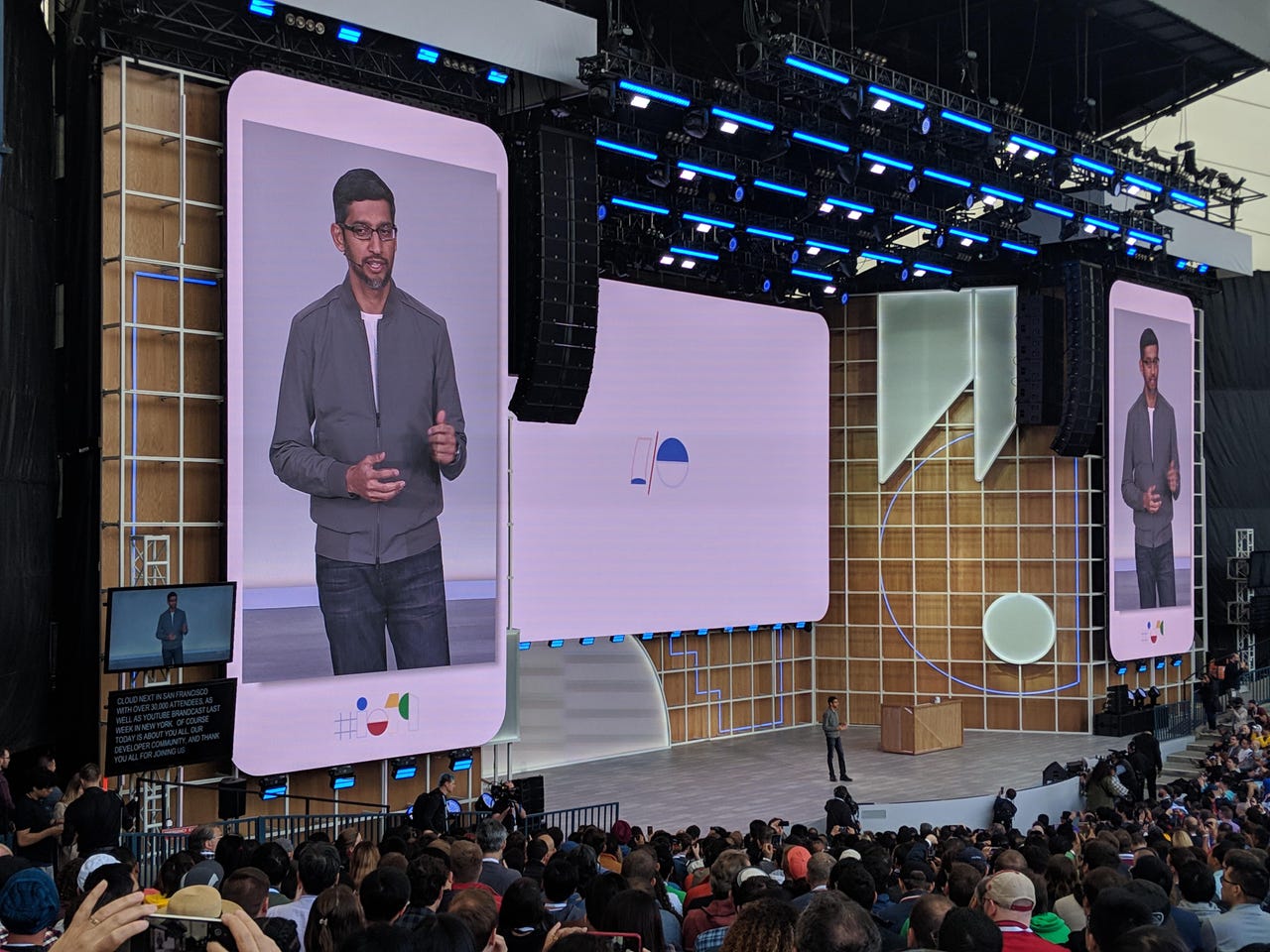

Thanks to advances in AI, Google is moving beyond its core mission of "organizing the world's information," Google CEO Sundar Pichai said Tuesday.

"We are moving from a company that helps you find answers to a company that helps you get things done," Pichai said in his Google I/O keynote address. "We want our products to work harder for you in the context of your job, your home and your life."

Two years ago at the annual developer conference, Pichai laid out Google's "AI first" strategy. On Tuesday, he described how AI is having a significant impact across all of Google's platforms, products and services.

From operating systems and apps to home devices and in-vehicle interfaces, Google is infusing AI everywhere. The idea is that AI will help people be more productive in all aspects of their lives, whether they're in a corporate setting or need help reading signs on the subway. With Google Assistant at the core of many announcements, including the next-generation Duplex, it's clear how new AI capabilities will bring more value to many of Google's products -- and potentially help Google build up its sources of revenue beyond ad sales.

Pichai opened the conference by showcasing how machine learning is enabling new features in Search. For instance, Google is bringing its "Full Coverage" News feature to Google Search to better organize news results. If a user searches for "black hole," for example, machine learning will help identify different types of stories and give the user a complete picture of how a story is being covered.

Google is also expanding the capabilities of Lens -- the AI camera tool that debuted at last year's I/O -- to connect more things in the physical world with digital information. For instance, a user could point their Lens-enabled device at a restaurant menu to pull up pictures of the menu items. Or they could point Lens at a recipe in Bon Appétit magazine to see the page come alive. Google said it's working with partners like retailers and museums to create these kinds of experiences.

To ensure AI camera features truly work for everyone, Google announced it has integrated new text translation and speech capabilities into Google Go, the Search app for entry-level devices. A user can simply point the app at a sign to have it read aloud to them. Users can also have the app translate text and then read it aloud in their preferred language.

The feature currently works in more than a dozen languages. It leverages text-to-speech, computer vision and Google's 20 years of language understanding from Search. Compressed into just over 100 KB, it can run on phones that cost as little as $35, so it's accessible to the more than 800 million adults around the globe who struggle to read.

AI is also driving new on-device tools -- some of them geared toward improving accessibility for disabled users. For instance, a tool called Live Relay helps deaf people use the phone, even if they prefer not to speak. Running completely on the device -- with the private conversation remaining in the user's hands -- Live Relay translates speech from incoming calls into text. Then, it translates the user's text responses into speech. It starts by alerting the person on the other end of the line that they're speaking with the user's virtual assistant.

Google is also exploring the idea of personalized communication models that can understand different types of speech. Google researchers are collecting speech samples from users who are hard to understand -- such as people who stutter or who have had a stroke -- to train new voice recognition models. The research project, called Project Euphonia, should inform Google Assistant voice recognition models in the future.

Google also showcased how its virtual assistant powers its hardware, including its expanded family of smart home devices. Google announced it has rebranded the Google Home family under the Nest name and is debuting the Nest Hub Max. Designed for communal spaces like the kitchen, the Nest Hub Max has a camera and a larger 10-inch display. It can effectively function as a television, a digital photo frame, a smart home controller and more, Google said. It launches later this summer for $229.

More from Google I/O:

- Google expands Android Jetpack, other Android development tools

- Google I/O: 14 Android OS modules to get over-the-air security update

- Google makes Cloud TPU Pods publicly available in beta

- Google sees next-gen Duplex, Assistant as taking over your tasks

- Google says it will address AI, machine learning model bias

- The Pixel 3A is official: Here's what you need to know

- Google's Pixel 3a's specs, price, features have near perfect timing

- Google expands ML Kit capabilities for building ML into mobile apps

- Google expands UI framework Flutter from just mobile to multi-platform

- Google I/O 2019: The biggest announcements from the keynote