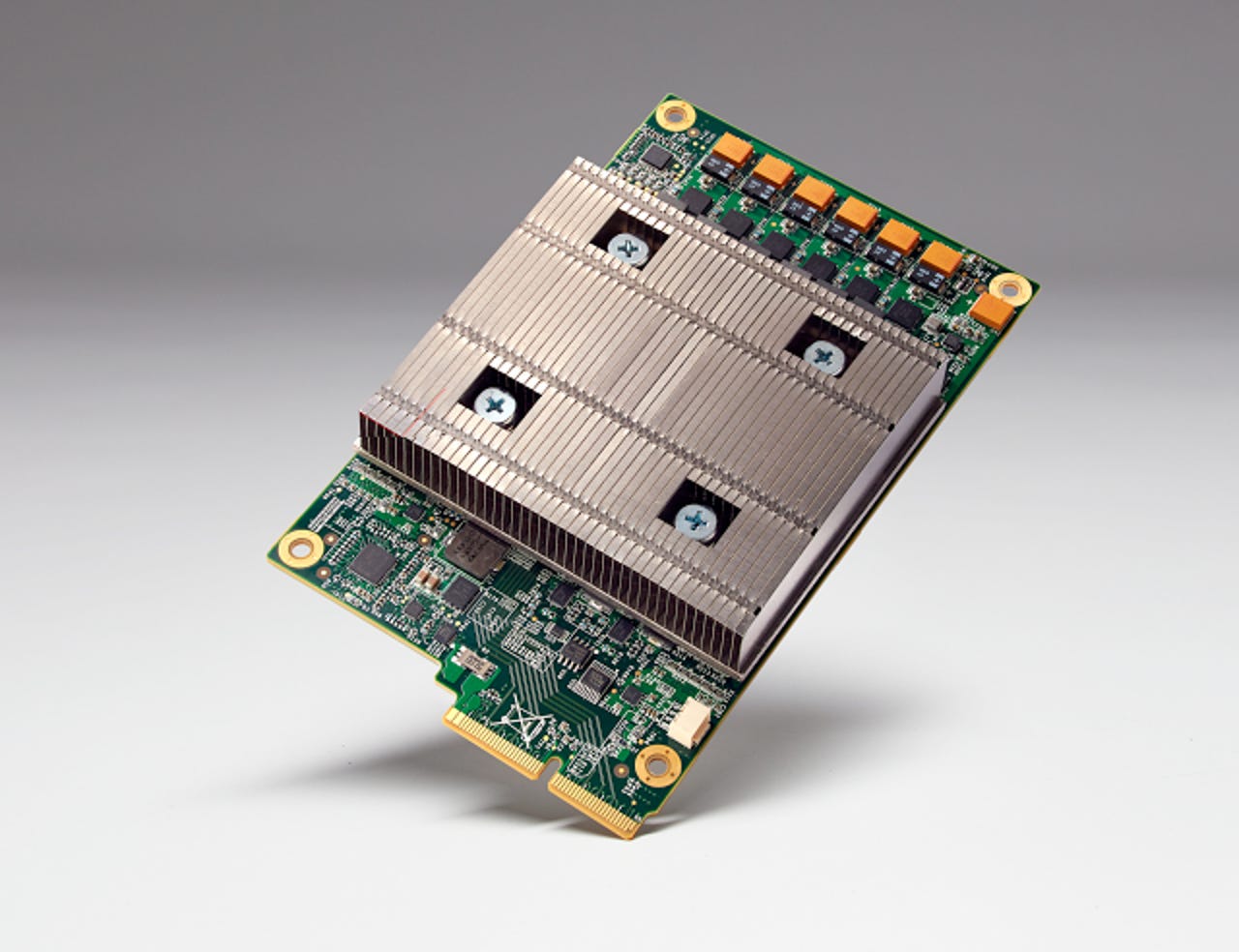

Google I/O: Custom TPU chip amplifies machine learning performance

Google on Wednesday revealed that for the past year, it's been powering data centers with a custom-built Tensor Processing Unit (TPU) chip designed for machine learning and tailored for TensorFlow.

Google started working on the chip because "for machine learning, the scale at which we need to do computing is incredible," Google CEO Sundar Pichai said in the keynote address at the Google I/O conference.

The result, the company said in a blog post, is that TPUs "deliver an order of magnitude better-optimized performance per watt for machine learning. This is roughly equivalent to fast-forwarding technology about seven years into the future (three generations of Moore's Law)."
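The "three generations of Moore's Law" comparison can be sanity-checked with back-of-the-envelope arithmetic. This assumes each generation is one doubling, which is a simplification the blog post does not spell out:

$$
2^{3} = 8 \approx 10^{1}, \qquad \frac{7\ \text{years}}{3\ \text{generations}} \approx 2.3\ \text{years per generation}
$$

Three doublings come out just under one order of magnitude. The implied cadence is also close to the roughly two-year doubling period commonly associated with Moore's Law.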

Because the chip is tailored for machine learning, it's more tolerant of reduced computational precision and requires fewer transistors per operation.
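The sketch below shows why machine learning workloads can tolerate reduced precision. It quantizes two small float vectors to 8-bit integers, computes their dot product with integer multiply-accumulates, and rescales the result once at the end. The article does not say which number format the TPU uses, so the symmetric int8 scheme here is an illustrative assumption. The helpers `quantize` and `int8_dot` are also hypothetical and do not belong to any TensorFlow or TPU API.

```python
# Hypothetical illustration of reduced-precision arithmetic.
# Assumption: symmetric linear quantization to 8-bit integers; the article
# does not state which number format the TPU actually uses.

def quantize(values, scale):
    """Map floats to integers in [-127, 127] using a shared scale factor."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def int8_dot(a, b):
    """Approximate the dot product of a and b using 8-bit integer products."""
    # Pick one scale per vector so its largest magnitude maps to 127
    # (fall back to 1.0 for an all-zero vector to avoid dividing by zero).
    sa = max(abs(x) for x in a) / 127 or 1.0
    sb = max(abs(x) for x in b) / 127 or 1.0
    qa = quantize(a, sa)
    qb = quantize(b, sb)
    # Integer multiply-accumulate, then a single rescale back to float.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb

weights = [0.12, -0.53, 0.88, 0.07]
inputs = [1.5, 0.25, -0.75, 2.0]
exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(weights, inputs)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

For these inputs the int8 result lands within a few percent of the full-precision value. In general, integer multipliers this narrow need far fewer transistors than floating-point units, which is the tradeoff the paragraph above describes.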

"We're innovating all the way down to the silicon to really bring unique capabilities to enterprise," Greg DeMichelle, director of product management for Google Cloud Platform, told reporters Wednesday at a presentation on the sidelines of the Google I/O conference.

This level of performance, he said, can help an enterprise "find the nuggets of information that make a difference between a 10 percent growth in business versus a 50 or 60 percent growth."

In other words, as Pichai said in the keynote, "When you use the Google Cloud platform, you not only get access to the great software we use internally, you get access to specialized hardware we build internally."

TPUs are already used to improve the relevance of search results and, through Street View, the accuracy of maps and navigation, among other things. And when Google's AlphaGo took on Go world champion Lee Sedol, it was powered by TPUs.