Google preps TPU 3.0 for AI, machine learning, model training


While Google is focused on artificial intelligence and making Google Assistant smarter and more conversational, the company needs processing horsepower to make it happen. Enter: TPU 3.0.

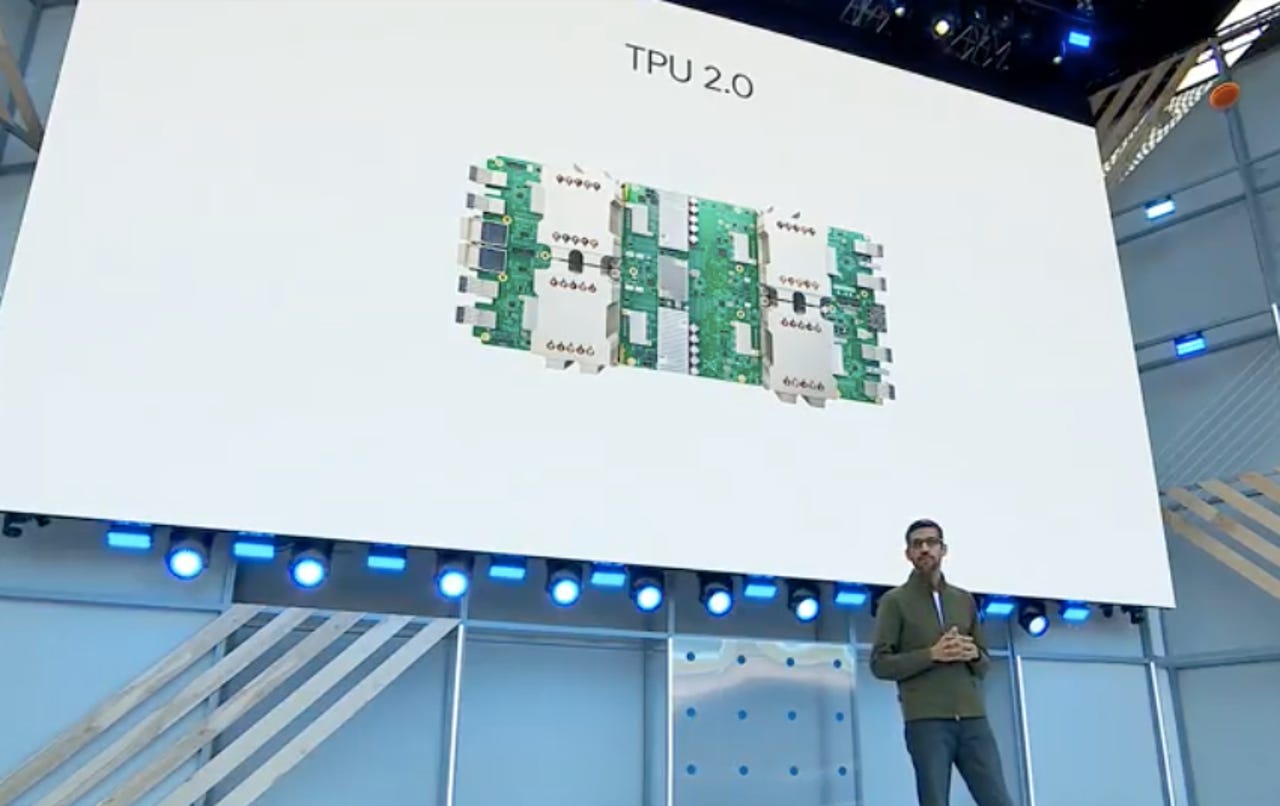

Google CEO Sundar Pichai outlined TPU 3.0, the third version of the company's Tensor Processing Unit. TPUs are Google's custom application-specific processors, built to accelerate machine learning and model training.

These TPUs handle TensorFlow workloads, which are used by researchers, developers, and businesses. TPU 3.0 will be primarily consumed through Google Cloud.


Pichai didn't serve up a ton of detail about TPU 3.0 pods other than to say they are eight times more powerful than the TPU 2.0 pods announced a year ago.

"These chips are so powerful that for the first time we had to introduce liquid cooling to our data centers," said Pichai. A TPU 3.0 pod can handle up to 100 petaflops of machine learning compute.

As for use cases, TPUs are used by Google to develop models with large batch sizes.

Pichai outlined Google Assistant improvements as well as some key research areas such as healthcare and predicting outcomes. One early example of Google AI revolved around predicting health risks from retina scans.